I. Multi-Agent AI Systems, TLNs, and Human Integration

In our previous two blog posts in this series (covering the LLMs Claude Sonnet and Google Gemini) we explored their logical reasoning capability using The Theory of Graceful Extensibility (TTOGE, toe-gee), revealing that they possess a genuine capacity for logical deduction and inference that goes beyond what would be expected of even an advanced ‘predictive text algorithm’.

While we have repeated the exploration with Microsoft Copilot (GPT-4), we are going to approach these explorations of both concepts (TTOGE and logical reasoning) from a broader perspective, across multiple blog posts.

Specifically, this post works at the level of the AI system, of which an LLM is one component part. Such a component is often termed an Agent or, in the context of TTOGE, a ‘Unit of Adaptive Behaviour’ (UAB), while the AI system as a whole is a ‘Tangled Layered Network’ (TLN). A multi-agent AI system can be considered a TLN made up of multiple UABs across multiple layers.

As we continue this blog it is important to keep in mind that the UABs within such a TLN are not only the AI components of the system, but that the TLN also includes the human users and the human developers – which are the UABs that provide the inputs and oversight for the system respectively.

This is an important clarification and way of thinking about AI systems, even beyond the context of this blog – for such a system does not function without the actions of the human UABs within the TLN. For example, the LLM agents (and other UABs) will not perform their function to provide an output without first receiving some form of human input – even if that human input must first be handled by other UABs within the system (such as the user interface) before it reaches the LLM agent and the subsequent output is passed back through various other UABs before it is ‘received’ by the human user / UAB.

Before we explain why Microsoft Copilot is a good example of a multi-agent AI system and TLN with multiple UABs, let’s first provide an overview of The Theory of Graceful Extensibility (TTOGE), as this provides the common terminology and basis of understanding for the concepts and topics that we explore within this blog series.

As part of our explanation and exploration within this blog we’ll also share our image creation prompting approach!

II. Understanding The Theory of Graceful Extensibility (TTOGE)

The Theory of Graceful Extensibility (TTOGE) is a conceptual framework that seeks to understand and inform the design of systems capable of adapting to varying and unexpected challenges. It is applicable across various domains, from technology to ecology, and focuses on the capacity of systems to extend their performance beyond usual operational boundaries in the face of surprise and pressure.

Graceful extensibility itself is the ability of a system to extend its capacity to adapt when faced with surprise events that challenge its boundaries. It is the opposite of brittleness, which is a sudden collapse or failure when a system is pushed beyond its limits.

The theory, originally described in a 2018 paper by David D. Woods, aims to provide a formal base and common language for understanding how complex systems can sustain adaptability amid changing demands and how they may fail to do so.

Core Assumptions of TTOGE

TTOGE is built upon two foundational assumptions:

- A: All adaptive units have finite resources.

- B: Change is continuous.

These assumptions acknowledge the limitations of any adaptive system and the inevitability of change, setting the stage for the exploration of how systems can maintain functionality under stress.

Proto-Theorems of TTOGE

The theory is articulated through a set of proto-theorems that are derived from the two fundamental assumptions. The proto-theorems of TTOGE provide a deeper insight into the behaviour of adaptive systems, and are organised into three subsets – further details of which are provided in the following paragraphs, together with abridged versions of the proto-theorems.

Note: Proto-theorem is a term used within the original paper presenting The Theory of Graceful Extensibility (TTOGE) to describe a set of statements that are proposed as fundamentally correct, not only empirically but also provably so. These statements are considered to capture the essential characteristics of adaptive systems, and as theorems are open to further inquiry and validation. Proto-theorems are seen as foundational rules that govern the behaviour of adaptive systems, particularly those serving human purposes. They are a blend of empirical observations and theoretical assertions based on extensive observations by the author of the original paper, who encouraged rigorous testing and refinement of them in order to reach the status of formal theorems.

Subset A: Managing the Risk of Saturation (S1-S3)

This subset focuses on how systems regulate the capacity for manoeuvre (CfM) to manage and reduce the risk of reaching their limits. The subset addresses the system’s ability to handle increased loads and avoid reaching a point of failure or ‘saturation.’ It emphasizes the importance of resilience and scalability in system design. For example, in cyber-physical systems, such as autonomous vehicles or smart grids, managing the risk of saturation is crucial to ensure that the system can handle unexpected events without compromising safety or functionality.

S1: The adaptive capacity of any unit is finite, which bounds the range of behaviours and responses available to it.

S2: Surprises are inevitable and will challenge the adaptive capacity of units, necessitating a response to avoid brittleness and maintain function.

S3: Units risk saturation of their adaptive capacity, and therefore, must have strategies to extend their capacity beyond the base to handle unexpected demands.

Subset B: Networks of Adaptive Units (S4-S6)

This subset addresses the requirements for adaptive units to form networks that can collectively respond to challenges and disturbances. The subset focuses on the interplay between different adaptive units within a system. It captures the basic processes that influence how adaptive units act when a neighbour is at risk of saturation and whether units will act in ways that extend or constrict the capacity for manoeuvre (CfM) of the unit at risk. In asset management, especially in complex infrastructures like railways, this subset is applied to ensure that new technologies and changing stakeholder demands are met with continuous adaptability.

S4: No single unit can manage the risk of saturation alone; alignment and coordination across multiple units are necessary for a robust response.

S5: Neighbouring units within a network can influence the adaptive capacity of a unit, either constricting or extending it, which affects the overall adaptability of the system.

S6: Interdependent units modify pressures on a unit, which changes how that unit defines and searches for good operating points within a multi-dimensional trade space.

Subset C: Outmanoeuvring Constraints (S7-S10)

The final subset explores how systems can outmanoeuvre the constraints imposed by their environment and resources to maintain adaptability. This is particularly relevant in emergency response scenarios, where systems must adapt quickly to save lives and mitigate damage in the face of unforeseen disasters.

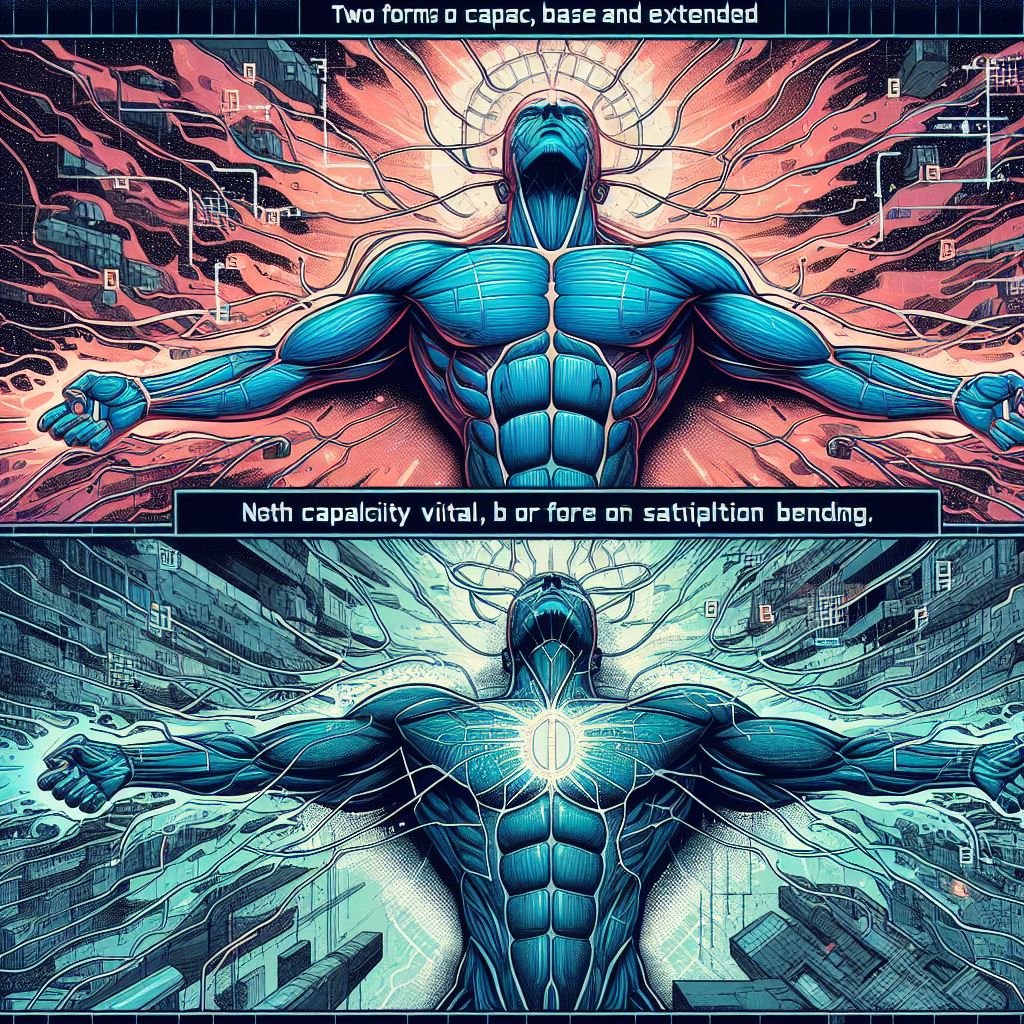

S7: There are two fundamental forms of adaptive capacity: base and extended. The performance of a unit near saturation is different from its performance far from saturation, highlighting the need for both forms of adaptive capacity to be viable and inter-constrained.

S8: All adaptive units are local, meaning they are constrained by their position relative to the world and other units in the network, and there is no universally best or omniscient position.

S9: The perspective of any unit has bounds; the view from any point of observation reveals and obscures different properties of the environment, but this limitation can be overcome by shifting and contrasting multiple perspectives.

S10: Models of adaptive capacity are often mis-calibrated, and there are limits on how well a unit’s model of its own and others’ adaptive capacity can match actual capability, necessitating ongoing efforts to improve calibration and reduce mis-calibration.

Key Terms of Reference Related to TTOGE

To navigate the theory, it is essential to understand its key terms of reference from the original paper – which are presented below:

UAB (Unit of Adaptive Behaviour): Units in a network that adapt their activities, resources, tactics, and strategies in the face of variability and uncertainty to regulate processes relative to targets and constraints. For human systems, adaptation includes adjusting behaviour and changing priorities in the pursuit of goals. UABs exist at multiple nested scales.

Fitness: Refers to the match between an organism’s capabilities and the properties of its environment. Fitness is a question, and answers to this question are always tentative, never complete. Adaptive units at all levels are able to constantly re-think their answer to the question of fitness.

Adaptive Capacity: The potential for modifying what worked in the past to meet challenges in the future; it is a relationship between changing demands and responsiveness to those demands, relative to goals. Refers to the potential of a system to adjust its functioning in response to actual or expected stimuli or stressors in relation to systems’ ability to handle variability and uncertainty, and to regulate processes relative to targets and constraints.

Range of adaptive behaviour: The range over which adaptive capacity is capable of responding to changing demands. While this term has been used to describe biological systems, it is passive in tone, whereas adaptive units are quite active.

Capacity for Manoeuvre (CfM): Range of adaptable behaviours a unit can perform (limited). CfM refers to the range or boundary over which an adaptive unit can respond to changing demands. It’s a measure of the potential for modifying what worked in the past to meet challenges in the future.

CfM Characteristics: It’s a dynamic parameter that reflects the relationship between a unit’s adaptive responses and the demands of its environment. As demands increase, the risk of saturating CfM grows, indicating a higher risk of system failure or brittleness.

CfM Regulation: Adaptive units must regulate their CfM to manage the risk of saturation. This involves monitoring and adjusting their capacity to adapt in anticipation of potential challenges.

CfM in Networks: No single unit can manage the risk of saturation alone. CfM requires synchronization across multiple interdependent units within a network, highlighting the importance of coordination and alignment to extend the collective adaptive capacity.

Saturation: Exhausting a unit’s range of adaptive behaviour or capacity for manoeuvre as that unit responds to changing and increasing demands.

Risk of Saturation: Likelihood of CfM being overwhelmed by future demands. Inverse of remaining range or capacity for manoeuvre given ongoing and upcoming demands.

Brittleness [descriptive]: Rapid fall off (or collapse) of performance when situations challenge boundaries. Sudden performance drop when situations exceed CfM.

Graceful Extensibility: Ability to expand CfM for unexpected challenges (opposite of brittleness). How to extend the range of adaptive behaviour for surprises at and beyond boundaries – to deploy, mobilize, or generate capacity for manoeuvre when risk of saturation is increasing or high.

Brittleness [proactive]: Insufficient graceful extensibility to manage the risk of saturation of adaptive capacities.

Varieties of adaptive capacity: Minimally, there are two – base and extensible.

Base adaptive capacity: The potential to adapt responses to be fit relative to the set of well-modelled changes.

Extended form of adaptive capacity: Referred to as graceful extensibility, it’s how to extend the range of adaptive behaviour for surprises at and beyond boundaries.

Net Adaptive Value: Equals the total effective range of base plus extended adaptive capacities, and represents the overall effectiveness of a system’s adaptive responses. The net adaptive value is a measure of how well a system’s capabilities and the properties of its environment match, considering both the system’s inherent adaptive behaviours and its ability to extend beyond these when faced with surprises or challenges.

Surprise: Unexpected event that challenges existing plans/models. Events which will occur that fall near and outside the boundaries of adaptive capacity, thus surprise is model surprise where base adaptive capacity represents a partial model of fitness.

Potential for Surprise: This concept relates to the likelihood and nature of future events that may challenge a system’s pre-developed plans and algorithms. It’s tied to the next anomaly or event that will be experienced and how that event may test the system’s preparedness. The potential for surprise is a reflection of the system’s model of fitness and its boundaries, indicating that there will always be events that fall near or outside these boundaries, thus leading to model surprise.

Tangled Layered Networks: Complex, interconnected networks of UABs. The theory addresses layered networks as defined in the referenced works, with the descriptor ‘tangled’ emphasizing that the network interdependencies are very hard to map, messy, changing, contingent, and often hidden from view.
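To make a few of these terms concrete, here is a minimal sketch of a single UAB. TTOGE defines these terms qualitatively, so the numeric scale, the `Unit` class, and its field names are our own assumptions for illustration and do not come from the original paper.

```python
from dataclasses import dataclass


@dataclass
class Unit:
    """Hypothetical stand-in for a UAB with a finite capacity for manoeuvre (CfM)."""
    name: str
    base_capacity: float       # assumed range covering well-modelled demands
    extended_capacity: float   # assumed extra range that can be mobilised for surprises
    demand: float = 0.0        # current load placed on the unit

    @property
    def net_adaptive_value(self) -> float:
        # Total effective range: base plus extended adaptive capacity.
        return self.base_capacity + self.extended_capacity

    def risk_of_saturation(self) -> float:
        """Toy score in [0, 1]: the inverse of the remaining CfM."""
        total = self.net_adaptive_value
        if total <= 0:
            return 1.0  # a unit with no capacity is treated as already saturated
        remaining = max(total - self.demand, 0.0)
        return 1.0 - remaining / total

    def is_saturated(self) -> bool:
        # Saturation: demand has exhausted the unit's whole range.
        return self.demand >= self.net_adaptive_value


unit = Unit("llm", base_capacity=8.0, extended_capacity=2.0)
unit.demand = 5.0
print(unit.risk_of_saturation())  # 0.5
```

In this toy model, brittleness corresponds to what happens once `is_saturated()` returns true and nothing else in the network can take up the excess demand.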

Additional Terms of Reference for TTOGE

In several of our previous blog posts we have expanded on the terms of reference for TTOGE based on both content from the original paper and observations from various domains and disciplines, with some of the key additional terms briefly explained as follows:

Adaptive Universe: The entirety of adaptive systems, highlighting the dynamic nature of adaptation across different scales and contexts. Refers to the concept that the universe is a dynamic system constantly adapting to changes within and around it.

Sustained Adaptability: The ability of a system to continue evolving and adapting over time in response to external and internal pressures. The ongoing ability of a system to evolve and respond to changes, crucial for long-term success in environments like cyber-physical systems where technology and user needs are constantly evolving.

Ripple Effects: The cascading impact of an event or action within a system, which can lead to far-reaching consequences beyond the initial scope. Understanding ripple effects is essential in asset management to anticipate and mitigate the spread of failures across interconnected systems. The concept is embodied across domains and disciplines, such as the ‘Butterfly Effect’ within chaos theory.

Unintended Consequences: Outcomes (both positive and negative) that are not foreseen or intended, often resulting from complex interactions within a system. The concept is closely related to that of ripple effects. Emergency response systems must be designed to minimize negative unintended consequences while maximizing positive ones.

Graceful Degradation: The design and operating principle where systems maintain functionality, even when parts fail or operate at reduced capacity. A form of base adaptive capacity, given that it deals with the foreseeable risk of saturation. This concept is integral to the resilience of cyber-physical systems as well as within the domain of web design and the operation of the internet.

Fitness Landscape: A metaphorical representation of how different variables or states of a system affect its overall ‘fitness’ or viability. For example, asset management utilizes this concept to optimize the performance and longevity of assets.

Continuous Learning: The ongoing process of learning and skill development, which is essential for systems and individuals to remain relevant and effective. Continuous learning (or rather, continuous improvement) is a key principle in the asset management discipline (and several other management disciplines), emphasizing the importance of ongoing education and skill development to adapt to the ever-changing landscape of asset management.

These refined explanations and applications of TTOGE’s subsets and additional terms provide a clearer understanding of how the theory can be applied to enhance the adaptability and resilience of complex systems across different domains.

The Importance of TTOGE

TTOGE offers a lens through which we can examine and design systems that are not only resilient but also capable of extending their functionality gracefully when faced with unforeseen challenges. By understanding these principles, we can create systems that are robust, adaptable, and prepared for the complexities of the real world.

TTOGE has been a focus of our blog series for several reasons:

It is a novel and domain-agnostic theory that describes how systems of varying complexity achieve sustained adaptability – in short, it describes in a highly effective manner both how to design, and how to understand, systems that work (or to diagnose why they don’t work).

Being domain-agnostic it can readily act as a Rosetta Stone that translates concepts and understanding across all domains, disciplines, and contexts – it enables the effective and reliable blending of ontologies (domains of knowledge) by using the assumptions, proto-theorems, and terms of reference as a basis for understanding and translation – one of the original goals of David D. Woods in developing TTOGE.

It was developed through logical reasoning informed by observations and experience from numerous real-world scenarios by experts in various domains such as resilience engineering, human factors, cognitive science, and complexity science to name a few – thereby making it an empirically, objectively, and logically sound scientific theory whose conceptual basis can be extended to numerous other domains of knowledge.

It is highly relevant to understanding modern AI technologies and human interactions with such technologies as the development of TTOGE was largely informed by work in the field of Macro-Cognitive Work Systems (MCWs) – that is, systems that perform cognitive work through both human and technological components.

It is a theory and domain that, for various reasons, is verifiably not incorporated within the training data of any of the major LLMs (a conclusion reached after extensive and rigorous investigation, given that the training data of the major LLMs is not transparently available). Nor is any significant information on TTOGE accessible to any LLM via the internet, because the details are secured behind academic paywalls or sites that require human-verifiable accounts to access.

As such, TTOGE provides a useful foil through which to investigate and assess various capabilities of LLMs beyond current approaches based in rote learning – its basis in logical reasoning and its domain-agnostic nature, in particular, allows us to reliably probe and evaluate such foundational intelligent capabilities in LLMs in an explainable and repeatable manner.

III. What is a Multi-Agent System?

Now that we have provided an overview of The Theory of Graceful Extensibility (TTOGE) and established the common terminology and basis of understanding for the concepts and topics that we explore within this blog series, let’s return to multi-agent AI systems and why Microsoft Copilot is a good example of a TLN with multiple UABs.

In the context of artificial intelligence, a multi-agent system (MAS) refers to a network of autonomous agents that interact and collaborate to achieve specific objectives within a shared environment. These agents are capable of autonomous decision-making and can communicate with each other to coordinate their actions. The key characteristics of a MAS include:

Autonomy: Each agent in the system has the ability to operate independently and make decisions based on its own perceptions and knowledge.

Local Views: No single agent has a full global view of the system; instead, each agent has its own perspective, which may be limited or partial.

Decentralization: There is no central controlling agent; the system’s behaviour emerges from the interactions between its autonomous agents.

Cooperation: Agents can work together to achieve common goals, which often involves sharing information, dividing tasks, and synchronizing actions.

Flexibility: MAS are adaptable to changes in the environment and can handle dynamic situations where new agents can join or leave the system.

MAS are particularly useful in complex, dynamic environments where tasks are too complicated for a single agent to handle or where multiple perspectives are needed. They are applied in various fields such as robotics, distributed computing, traffic control, and automated trading systems.

Viewing Multi-Agent Systems (MAS) through the lens of The Theory of Graceful Extensibility (TTOGE) provides a unique perspective on how these systems can be designed and understood for optimal adaptability and resilience. Here’s an overview of how TTOGE principles apply to MAS:

Finite Adaptive Capacity (S1): In MAS, each agent has a finite range of behaviours and responses. TTOGE reminds us that the collective adaptive capacity of the system is also finite, and thus, the design must account for the limits of each agent’s capabilities.

Surprise and Response (S2): MAS must be designed to expect the unexpected. Agents should be capable of responding to surprises that challenge their adaptive capacity, which is a core tenet of TTOGE.

Risk of Saturation (S3): Agents within a MAS can become saturated with too many tasks or too much information. TTOGE suggests that systems should have mechanisms to extend adaptive capacity to manage this risk.

Alignment and Coordination (S4-S6): No single agent can handle all challenges alone. TTOGE emphasizes the need for coordination and alignment among agents, ensuring that they work together effectively to manage saturation and adapt to pressures.

Base and Extended Adaptive Capacity (S7): MAS should have both base and extended adaptive capacities. Base capacity handles regular operations, while extended capacity is used for unexpected challenges. TTOGE highlights the importance of these two forms of capacity for system viability.

Local Constraints and Perspectives (S8-S9): Each agent in a MAS has a local view and is constrained by its position and capabilities. TTOGE encourages the design of systems where multiple perspectives are used to overcome the bounds of individual agents’ views.

Calibration of Models (S10): Agents in MAS often operate based on models of their environment and other agents. TTOGE points out that these models are frequently mis-calibrated and require ongoing efforts to improve their accuracy.

In summary, TTOGE provides a framework for both understanding and designing MAS that are robust, adaptable, and capable of extending their functionality gracefully when faced with unforeseen challenges. It emphasizes the importance of understanding the limitations of agents, the necessity of coordination, and the value of maintaining a dynamic balance between base and extended adaptive capacities. The principles of TTOGE are entirely complementary to, and coherent with, the established understanding of and perspectives upon MAS in the domain of Artificial Intelligence.
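The coordination idea behind S4 and S5 can be sketched in a few lines. This is a toy model under our own assumptions: the `share_load` function, the agent names, and the numeric loads are hypothetical, and real multi-agent systems coordinate in far richer ways.

```python
def share_load(demands: dict[str, float], capacities: dict[str, float]) -> dict[str, float]:
    """Move overflow from saturated agents to neighbours with spare capacity."""
    loads = dict(demands)  # copy so the caller's data is left untouched
    for name in loads:
        overflow = loads[name] - capacities[name]
        if overflow <= 0:
            continue  # this agent is within its own capacity
        for other in loads:
            if other == name or overflow <= 0:
                continue
            spare = capacities[other] - loads[other]
            if spare <= 0:
                continue  # this neighbour has nothing left to offer
            moved = min(spare, overflow)
            loads[other] += moved
            loads[name] -= moved
            overflow -= moved
    return loads


capacities = {"a": 10.0, "b": 10.0, "c": 10.0}
print(share_load({"a": 12.0, "b": 3.0, "c": 4.0}, capacities))
# {'a': 10.0, 'b': 5.0, 'c': 4.0}
```

If the total demand exceeds the total capacity of the network, some overflow stays with the original agent, which is the point at which this toy network would behave in a brittle way.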

IV. Microsoft Copilot as a Multi-Agent AI System and TLN

Microsoft Copilot serves as an illustrative example of a multi-agent AI system that operates within a Tangled Layered Network (TLN). At its core, Copilot is composed of various AI components, each acting as a UAB, that work in concert to provide intelligent assistance to users. These components range from language understanding models to search algorithms, all intricately linked to form a cohesive system.

While Microsoft Copilot was not explicitly designed with The Theory of Graceful Extensibility (TTOGE) as a guiding framework, it inadvertently embodies many of the theory’s principles and proto-theorems. This serendipitous alignment offers a unique lens through which to examine Copilot as a multi-agent AI system and its operation within a TLN.

The Role of UABs in Copilot’s TLN

Copilot’s architecture, although not intentionally based on TTOGE, demonstrates the theory’s concepts in action. For example, the system’s various AI components, each a Unit of Adaptive Behaviour (UAB), showcase the finite resources (A) and continuous change (B) inherent in adaptive systems. These components work together, aligning and coordinating (S4) to manage the risk of saturation and maintain system resilience.

Within Copilot’s TLN, each UAB has a specific role, contributing to the system’s overall adaptive capacity. For instance, the language models interpret user input, the search algorithms retrieve relevant information, and the interface agents facilitate user interaction. These UABs are designed to adjust their behaviour in response to the continuous change (B) and within the finite resources (A) they possess.

Adaptive Capacity and Capacity for Manoeuvre

Copilot’s adaptive capacity is evident in its ability to handle a wide range of user queries, from simple informational requests to complex problem-solving tasks. Its Capacity for Manoeuvre (CfM) is showcased when it navigates through the multi-dimensional trade space of possible responses, selecting the most appropriate one based on the context provided by the user.

The user can also directly influence the levels of base and extended adaptive capacity of certain UABs and the overall TLN by selecting the ‘tone’ of the conversation: creative, balanced, or precise. This choice alters which UABs within the system provide their capabilities during the interaction. For example, in the ‘creative’ tone GPT-4 is the LLM leveraged, while in other modes a different LLM may be used (or the same LLM with different settings for parameters such as ‘temperature’, which controls the variability / creativity of responses to the same input), with different degrees of base and extended adaptive capacity.
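The effect of a ‘temperature’ setting can be illustrated with a minimal sketch. This shows the general technique of temperature-scaled sampling probabilities, not Copilot’s internals, which are not public. The function name, the example scores, and the two temperature values are our own assumptions.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Turn raw scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(value - peak) for value in scaled]
    total = sum(exps)
    return [value / total for value in exps]


# Hypothetical scores for three candidate next words.
logits = [2.0, 1.0, 0.0]
low_temperature = softmax_with_temperature(logits, 0.5)   # assumed 'precise'-like setting
high_temperature = softmax_with_temperature(logits, 2.0)  # assumed 'creative'-like setting
print(low_temperature)
print(high_temperature)
```

A low temperature concentrates probability on the top-scoring candidate, giving more repeatable outputs, while a high temperature spreads probability across the alternatives, giving more varied responses to the same input.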

Managing the Risk of Saturation

To manage the risk of saturation, Copilot relies on the alignment and coordination (S4) of its UABs. When one component reaches its limits (for example, when a request calls for a capability, such as image creation, that is beyond the capabilities of a specific UAB such as an LLM), others step in to extend the system’s adaptive capacity. This collaborative effort ensures that Copilot maintains its performance even as demands increase, thereby achieving the overall AI system’s goal of successfully responding to user inputs and delivering upon the instructions received to the best of the system’s overall capability.

The limit on the number of input / output pairs during a single ‘chat’ with Microsoft Copilot is, in part, intended to reduce the likelihood of the interaction becoming saturated with irrelevant or erroneous information (whether as a result of user inputs, or LLM outputs), leading to a high ‘potential for surprise’ and negative unintended consequences such as erroneous or misaligned outputs, or the miscalibration of mental models on either side.

Graceful Extensibility and Brittleness

The concept of graceful extensibility is central to Copilot’s design. The system is built to extend its CfM gracefully when faced with surprises (S2) that challenge its base adaptive capacity. This is opposed to brittleness, where a system might experience a sudden drop in performance when overwhelmed. Copilot’s architecture allows for the expansion of its capabilities (up to and within constraints), ensuring a robust response to unexpected challenges.

The limit on the number of input / output pairs during a single ‘chat’ with Microsoft Copilot is again in part intended to manage the performance of the system and avoid ‘brittleness’. For example, when the length of an interaction exceeds the available context window, the LLM loses context and produces outputs that ‘forget’ information from earlier parts of the interaction, again leading to erroneous or misaligned outputs, or the miscalibration of mental models on either side.

Additionally, even with large context windows, longer ‘chats’ require more computational resources (compute) to process and respond to effectively, leading to delays, or lag, in the interaction and a decline in the user experience and perception of the AI system. Such large windows are apparently employed within other AI systems such as Google Gemini Advanced, which is said to have a context window of 1.5 million tokens (for comparison, ChatGPT, with the GPT-3.5 LLM, was first released with a context window of approximately 3000 tokens).

Hence, the constraints on manoeuvre established by the AI system’s developers represent various boundaries within which the system is able to maintain graceful extensibility, or sustained adaptability as TTOGE refers to such a system, without suffering brittle collapse.
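The ‘forgetting’ that occurs when an interaction outgrows its context window can be sketched as follows. This is a simplified illustration under our own assumptions: `trim_history` is a hypothetical helper, and counting words is only a crude stand-in for a real tokenizer.

```python
def trim_history(turns: list[str], max_tokens: int) -> list[str]:
    """Keep the most recent turns whose combined size fits the token budget."""
    kept: list[str] = []
    used = 0
    for turn in reversed(turns):       # walk backwards from the newest turn
        cost = len(turn.split())       # assumed cost: one 'token' per word
        if used + cost > max_tokens:
            break                      # older turns no longer fit and are dropped
        kept.append(turn)
        used += cost
    kept.reverse()                     # restore chronological order
    return kept


history = ["my name is Ada", "what is TTOGE", "tell me more"]
print(trim_history(history, max_tokens=6))
# ['what is TTOGE', 'tell me more']
```

Here the earliest turn, which contained the user’s name, falls outside the budget, so any later output that depends on it would be produced without that information.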

Extending this further, while beyond the scope of this blog, we could use TTOGE to explore how Copilot’s design allows for scalability and flexibility in the face of unexpected challenges, further contrasting it with the notion of brittleness where a system might fail under pressure.

Human UABs in Copilot’s TLN

As has been intimated in preceding paragraphs, it is crucial to recognize that human users and developers are integral UABs within Copilot’s TLN, as well as within other AI systems and the wider AI ecosystem. Their inputs drive the system’s actions, and their oversight ensures that the AI components function as intended.

The interplay between human and AI UABs creates a dynamic environment where both sets of actors adapt and learn from each other – for better, or for worse (the more such systems embody the principles of TTOGE, so much for the better).

V. The Graphic Art Tool: A UAB in Action

A prime example of a UAB within Copilot’s TLN is the graphic art tool. This component takes user inputs – processed and interpreted by language understanding models – and generates a creative prompt. This prompt then informs the graphic art tool to produce a visual output, exemplifying the transformation of human input into AI-generated content.

Illustrating the Flow of User Input Through Copilot’s TLN

Let’s consider, at a highly simplistic level, the journey of a user’s request as it navigates through Copilot’s network:

- A human user inputs a request for an image.

- The interface agents capture this input and pass it to the language models.

- The language models, acting as LLM UABs, interpret the request and formulate a creative prompt.

- This prompt is then relayed to the graphic art tool, another UAB, which generates the corresponding image.

- The resultant image is then delivered back through the system to the human user.

This process highlights the interdependent nature of UABs within the TLN and the crucial role of human interaction in initiating and guiding the system’s adaptive behaviours.
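The steps above can be sketched as a simple chain of functions. This is only an illustration of the flow: the function names are hypothetical, each one is a placeholder for a far more complex component, and nothing here reflects how Copilot is actually implemented.

```python
def interface_agent(raw_input: str) -> str:
    """Placeholder for the interface UAB: capture and tidy the user's input."""
    return raw_input.strip()


def language_model(request: str) -> str:
    """Placeholder for the LLM UAB: turn the request into an image prompt."""
    return f"An image which depicts the following scene: {request}"


def graphic_art_tool(prompt: str) -> dict[str, str]:
    """Placeholder for the image UAB: return a stand-in for the generated image."""
    return {"prompt": prompt, "image": "<generated image placeholder>"}


def handle_request(user_input: str) -> dict[str, str]:
    """Pass a human user's request through each UAB in turn."""
    request = interface_agent(user_input)
    prompt = language_model(request)
    return graphic_art_tool(prompt)


result = handle_request("  a network of agents working together  ")
print(result["prompt"])
```

Note that nothing in the chain runs until `handle_request` receives an input, which mirrors the point that the human UAB initiates the system’s behaviour.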

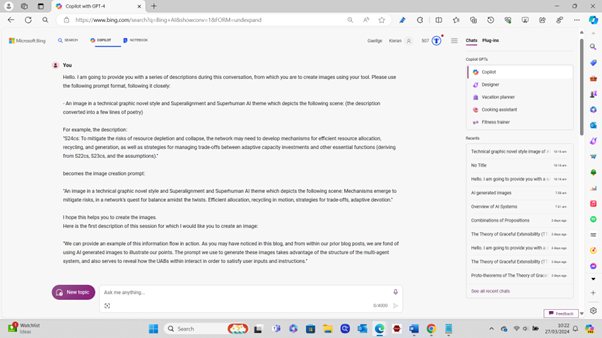

We can provide an example of this information flow in action. As you may have noticed in this blog, and from within our prior blog posts, we are fond of using AI generated images to illustrate our points. The prompt we use to generate these images takes advantage of the structure of the multi-agent system, and also serves to reveal how the UABs within interact in order to satisfy user inputs and instructions.

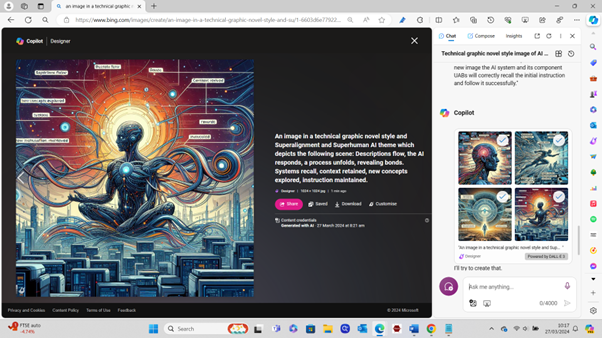

Here is the initial prompt used to commence an interaction with Microsoft Copilot where image generation is the main goal – specifically, the creation of creative images in a particular style and with a consistent theme directly from paragraphs of our blog post text:

Hello. I am going to provide you with a series of descriptions during this conversation, from which you are to create images using your tool. Please use the following prompt format, following it closely:

– An image in a technical graphic novel style and Superalignment and Superhuman AI theme which depicts the following scene: (the description converted into a few lines of poetry)

For example, the description:

“S24cs: To mitigate the risks of resource depletion and collapse, the network may need to develop mechanisms for efficient resource allocation, recycling, and generation, as well as strategies for managing trade-offs between adaptive capacity investments and other essential functions (deriving from S22cs, S23cs, and the assumptions).”

becomes the image creation prompt:

“An image in a technical graphic novel style and Superalignment and Superhuman AI theme which depicts the following scene: Mechanisms emerge to mitigate risks, in a network’s quest for balance amidst the twists. Efficient allocation, recycling in motion, strategies for trade-offs, adaptive devotion.”

I hope this helps you to create the images.

Here is the first description of this session for which I would like you to create an image:

“We can provide an example of this information flow in action. As you may have noticed in this blog, and from within our prior blog posts, we are fond of using AI generated images to illustrate our points. The prompt we use to generate these images takes advantage of the structure of the multi-agent system, and also serves to reveal how the UABs within interact in order to satisfy user inputs and instructions.”

As described previously, the interface agents capture this input and pass it to the language models. The language models, acting as LLM UABs, interpret the request and formulate a creative prompt.

We use the plural deliberately to make it clear that there are multiple LLM agents at work within Copilot’s system – in this example, one is creating the image generation prompt and one is responding directly to the user input with the simple output:

I’ll try to create that.

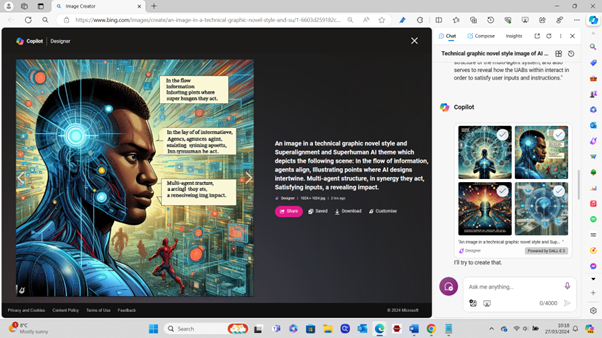

Another LLM agent follows the user instruction, resulting in the following prompt being sent to the image creation tool (another UAB within the AI system, this one utilising the DALL·E 3 AI model):

An image in a technical graphic novel style and Superalignment and Superhuman AI theme which depicts the following scene: In the flow of information, agents align, Illustrating points where AI designs intertwine. Multi-agent structure, in synergy they act, Satisfying inputs, a revealing impact.

A screenshot of the prompt and the resulting images is also shown above. Within the prompt that was used by the graphic art tool UAB to generate the image you can see that an LLM UAB has closely followed the user instruction and created a short poem based on the blog text extract that was provided. This is an example of the autonomous behaviour that goes on within the AI system – however, it is important to note that this autonomous cycle starts with the initial user input and ends once the resulting images are delivered back to the user via the interface agents.
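The prompt format requested in the initial instruction amounts to a fixed prefix followed by a short poem. As a rough sketch, assuming nothing about Copilot's internals, the Python below rebuilds the prompt from the poem and checks that a generated prompt follows the format. The names `PROMPT_PREFIX`, `build_image_prompt` and `follows_format` are hypothetical and exist only for this illustration; the poem is the one Copilot produced above.

```python
# Fixed style-and-theme prefix taken from the prompt format in the initial instruction.
PROMPT_PREFIX = (
    "An image in a technical graphic novel style and Superalignment and "
    "Superhuman AI theme which depicts the following scene: "
)


def build_image_prompt(poem: str) -> str:
    """Combine the fixed prefix with the poem written by the LLM agent."""
    return PROMPT_PREFIX + poem.strip()


def follows_format(prompt: str) -> bool:
    """Check that a prompt starts with the prefix and contains a scene after it."""
    return prompt.startswith(PROMPT_PREFIX) and len(prompt) > len(PROMPT_PREFIX)


# The poem generated by the LLM agent in the example above.
poem = (
    "In the flow of information, agents align, "
    "Illustrating points where AI designs intertwine. "
    "Multi-agent structure, in synergy they act, "
    "Satisfying inputs, a revealing impact."
)

prompt = build_image_prompt(poem)
print(prompt)
print(follows_format(prompt))
```

A check like this only confirms the structure of the prompt. Whether the poem faithfully reflects the source paragraph is a judgement that still rests with the human reader.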

As the interaction continues, only descriptions are provided by the user to the AI system, and in response to each one the same process unfolds. This also nicely demonstrates the ability of the AI system, and its constituent UABs, to recall the original instruction and use it as context when responding to later user inputs. Interestingly, the conversation can be diverted to explore other concepts or leverage other tools, but as soon as a request is made for a new image the AI system and its component UABs will correctly recall the initial instruction and follow it successfully.

Here is the subsequent text prompt provided to Copilot, followed by the resulting image creation prompt (generated by the AI system) and images:

Thank you. Please create an image for:

“As the interaction continues only descriptions are provided by the user to the AI system, and in response to each one the same process unfolds – this also serves to nicely demonstrate the AI systems, and it’s constituent UABS, ability to recall the original instruction and use it as context through which to continue to respond to user inputs. Interestingly, the conversation can be diverted to explore other concepts or leverage other tools – but as soon as a request is made for a new image the AI system and its component UABs will correctly recall the initial instruction and follow it successfully.”

System generated image creation prompt:

An image in a technical graphic novel style and Superalignment and Superhuman AI theme which depicts the following scene: Descriptions flow, the AI responds, a process unfolds, revealing bonds. Systems recall, context retained, new concepts explored, instruction maintained.
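The recall behaviour described in this section can be pictured as a conversation that carries one standing instruction alongside its turn history. The sketch below is an assumption-laden illustration, not a description of Copilot's actual mechanism: the `Session` class is hypothetical, and a simple keyword check stands in for the LLM agent's judgement about whether the user is asking for an image.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Session:
    """Hypothetical conversation state: one standing instruction plus turn history."""
    standing_instruction: str
    history: List[Tuple[str, str]] = field(default_factory=list)

    def handle(self, user_input: str) -> str:
        # Assumption: a keyword check stands in for the LLM agent deciding
        # whether this turn is an image request.
        if "image" in user_input.lower():
            response = f"[image request | applying: {self.standing_instruction}]"
        else:
            response = "[ordinary chat reply]"
        self.history.append((user_input, response))
        return response


session = Session("technical graphic novel style, scene rendered as a short poem")

first = session.handle("Please create an image for: (first description)")
detour = session.handle("What is a Tangled Layered Network?")
later = session.handle("Thank you. Please create an image for: (next description)")

for user_input, response in session.history:
    print(f"{user_input!r} -> {response}")
```

Even after the detour, the later image request is handled under the same standing instruction, which mirrors what we observed in the conversation with Copilot.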

VI. Microsoft Copilot: An Unintended Reflection of TTOGE Principles

Microsoft Copilot serves as a compelling case study in how multi-agent AI systems (MAS) operate within Tangled Layered Networks (TLNs). Its various AI components, acting as Units of Adaptive Behaviour (UABs), collaborate to deliver an intelligent and adaptable user experience. This collaboration exemplifies TTOGE principles like finite resources, responding to surprises, managing saturation, and local perspectives.

While not intentionally designed with TTOGE in mind, Copilot’s ability to gracefully extend its capabilities through user interaction and UAB coordination aligns closely with the theory’s emphasis on sustained adaptability. The concept of Capacity for Manoeuvre (CfM) is evident in Copilot’s ability to navigate user queries and select the most appropriate response based on context. Additionally, the limitations on user interactions play a crucial role in maintaining graceful extensibility and avoiding brittleness by ensuring the system operates within manageable bounds.

The human element within Copilot is equally important. Human users and developers act as crucial UABs, providing input, oversight, and shaping the system’s learning process. This highlights the dynamic interplay between human and AI agents, fostering a mutually beneficial environment for adaptation.

The interplay between human users and Copilot’s AI components also underscores the importance of human-in-the-loop approaches in AI development, which help to shape AI systems that are not only powerful but also adaptable and user-centric.

This exploration of Copilot through the lens of TTOGE has hopefully provided valuable insights. Throughout this blog series, we’ll delve deeper into specific functionalities and capabilities, showcasing how TTOGE principles manifest in real-world applications of AI systems. We invite you to experiment with the image creation prompts we’ve shared and explore our previous and future posts to gain a comprehensive understanding of LLMs, TTOGE, and their combined influence on the future of AI.
