I. Hot and Cold Running Order: Why Perfect Sustainability is a Myth

Sustainable development has emerged as a key global endeavour, seeking to harmonise human progress with the preservation of our finite resources and fragile environment. Yet, as we delve into the fundamental principles of thermodynamics, a sobering realization dawns – true sustainability, in its purest form, is an unattainable ideal due to the inexorable laws that govern the universe.

This post explores these inviolable laws, beginning with the bedrock concepts of enthalpy and entropy. Through the lens of first principles thinking, we deconstruct these ideas to their core, paving the way for a profound exploration of the arrow of time – that unidirectional progression from past to future, manifested in the relentless rise of entropy.

As we navigate the intricate interplay between enthalpy and the arrow of time, we find that while enthalpy changes are integral to thermodynamic processes, they do not by themselves set the direction of time. The discussion then widens to the three types of system (open, closed, and isolated) and their roles in shaping entropy dynamics and energy exchange.

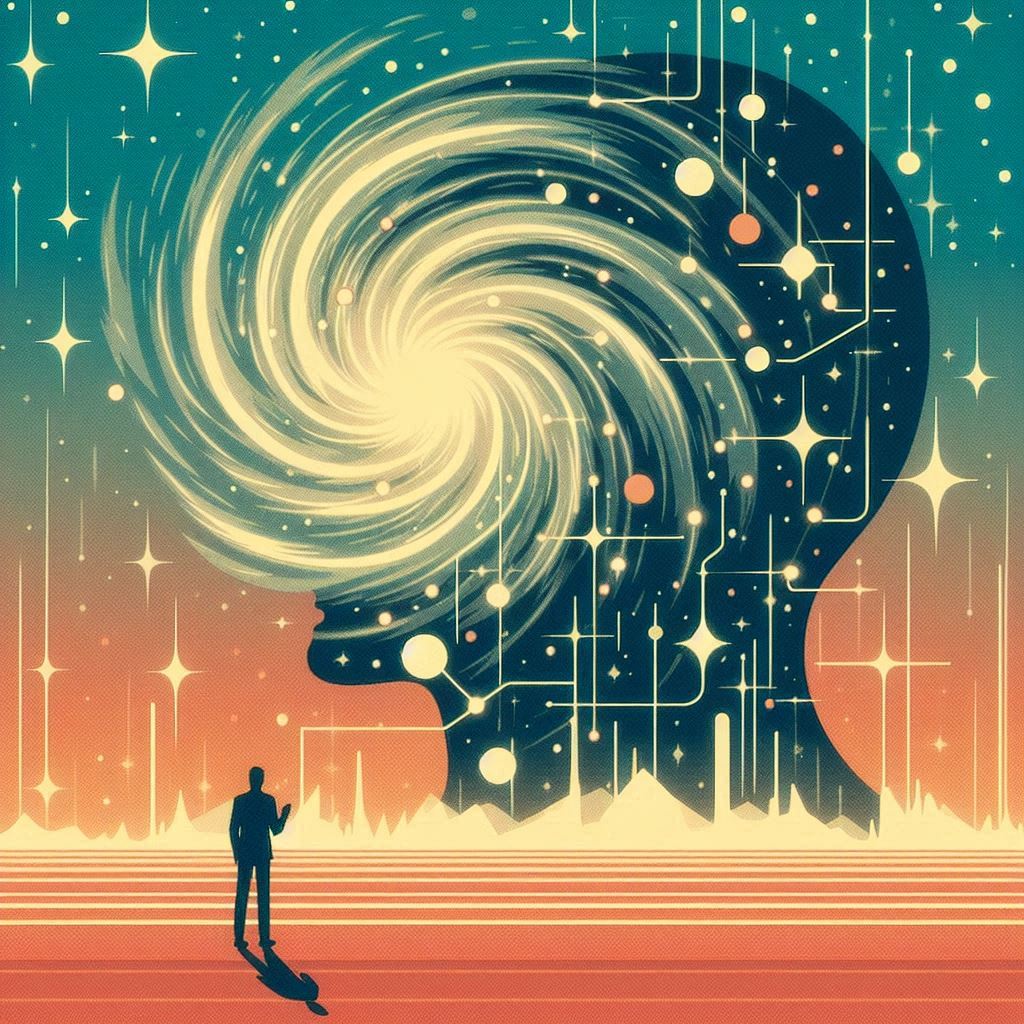

Venturing further, we encounter the tantalizing notion of negentropy – a measure of order amidst disorder, a concept woven through the tapestry of life and human ingenuity. We explore how technologies like the Passive House standard and artificial intelligence embody negentropy, creating ephemeral pockets of order that locally resist the universal trend of increasing entropy while total entropy continues to rise.

The Passive House standard is examined to illustrate the potential of its design philosophy to maintain order transiently and curb entropy within system boundaries. This paradigm catalyses a broader discourse on sustainability – the pragmatic endeavour to temper entropy’s rise while navigating the inescapable constraints imposed by the second law of thermodynamics.

We’ll contemplate the necessity of growth for the maintenance of our pseudo-equilibrium, which demands the perpetual influx of new resources and energy into our ‘world system’ – a finite and ever-changing domain. This understanding compels us to revisit the laws of thermodynamics, reframing them through the prism of Gibbs free energy and equilibrium across the spectrum of system types.

Our explorations then turn to the concepts of system-of-systems, microstates, and macrostates, unveiling their intricate interplay with Gibbs free energy and negentropy. We then forge connections to the discipline of physical asset management, perceiving asset portfolios as complex systems-of-systems that require strategic stewardship to optimize efficiency and longevity within the limits of available resources.

Each concept builds upon the previous, elucidating the intricate interactions and management of systems in a paradigm that acknowledges the universe’s immutable laws while striving for sustainable practices.

Through this odyssey, we aim to synthesize the realms of thermodynamics, systems theory, and sustainability, distilling a nuanced understanding of how these principles shape the design, management, and resilience of systems. While the platonic ideal of perpetual sustainability may forever elude our grasp, this exploration empowers us to pursue that aspiration as effectively as possible within the ever-changing constraints of a finite world governed by entropy’s ascendancy.

II. Applying First Principles to Enthalpy

First principles thinking involves breaking down complex concepts into their most fundamental parts and then reconstructing them from the ground up. It’s about understanding a concept so deeply that you can recreate the reasoning that leads to it. In this section, we will apply this method to the concept of enthalpy.

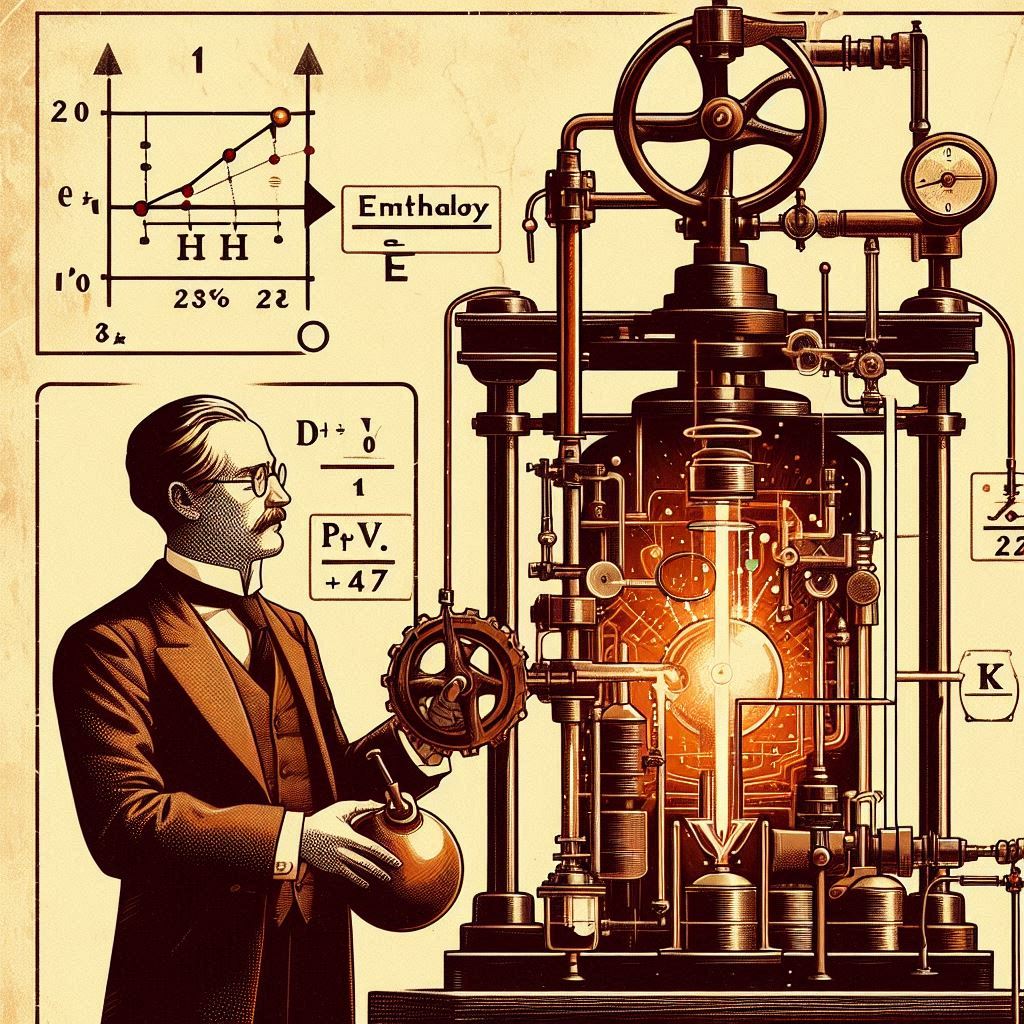

Enthalpy, denoted as H, is a thermodynamic quantity often loosely described as the ‘heat content’ of a system. It’s defined as the sum of the system’s internal energy E and the product of its pressure P and volume V:

H = E + PV

This equation tells us that enthalpy is not just about the energy contained within the system due to molecular interactions (internal energy) but also includes the PV term: the work needed to make room for the system by pushing back its surroundings at pressure P.

Now, let’s break this down further:

Internal Energy (E): This is the energy related to the motion and arrangement of molecules within the system. It includes kinetic energy, potential energy, chemical energy, etc.

Pressure (P): This is the force exerted by the system on its surroundings per unit area. It’s a measure of how much the system’s molecules are pushing against the boundaries of the container.

Volume (V): This is the space that the system occupies. It’s related to the size of the container and how much space the molecules of the system need.

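When we talk about changes in enthalpy (ΔH), we’re usually concerned with the heat absorbed or released during a process at constant pressure. If the only work done is pressure-volume work, the heat absorbed at constant pressure equals ΔH exactly, which is what makes enthalpy so convenient. Because enthalpy is also a state function, its value depends only on the current state of the system, not the path taken to get there, so ΔH depends only on the initial and final states.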
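A minimal worked example of the constant-pressure energy balance, assuming only pressure-volume work: since the first law gives ΔE = q − PΔV when a system expands against a fixed pressure P, the heat absorbed is q = ΔE + PΔV = ΔH. The 500 J and 1.0 L figures below are hypothetical, chosen only to make the arithmetic visible.

```python
# Constant-pressure energy balance: with P fixed and only PV work,
# the heat absorbed q_p equals ΔH = ΔE + PΔV.
ATM_PA = 101_325.0  # one standard atmosphere, in pascals


def enthalpy_change(delta_e_j: float, pressure_pa: float, delta_v_m3: float) -> float:
    """Return ΔH = ΔE + PΔV in joules for a process at constant pressure."""
    return delta_e_j + pressure_pa * delta_v_m3


# Hypothetical process: internal energy rises by 500 J while the
# system expands by 1.0 L (0.001 m³) against atmospheric pressure.
delta_e = 500.0
delta_v = 0.001
pv_work = ATM_PA * delta_v  # work spent pushing back the surroundings
delta_h = enthalpy_change(delta_e, ATM_PA, delta_v)

print(f"PΔV = {pv_work:.3f} J")       # PΔV = 101.325 J
print(f"ΔH = q_p = {delta_h:.3f} J")  # ΔH = q_p = 601.325 J
```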

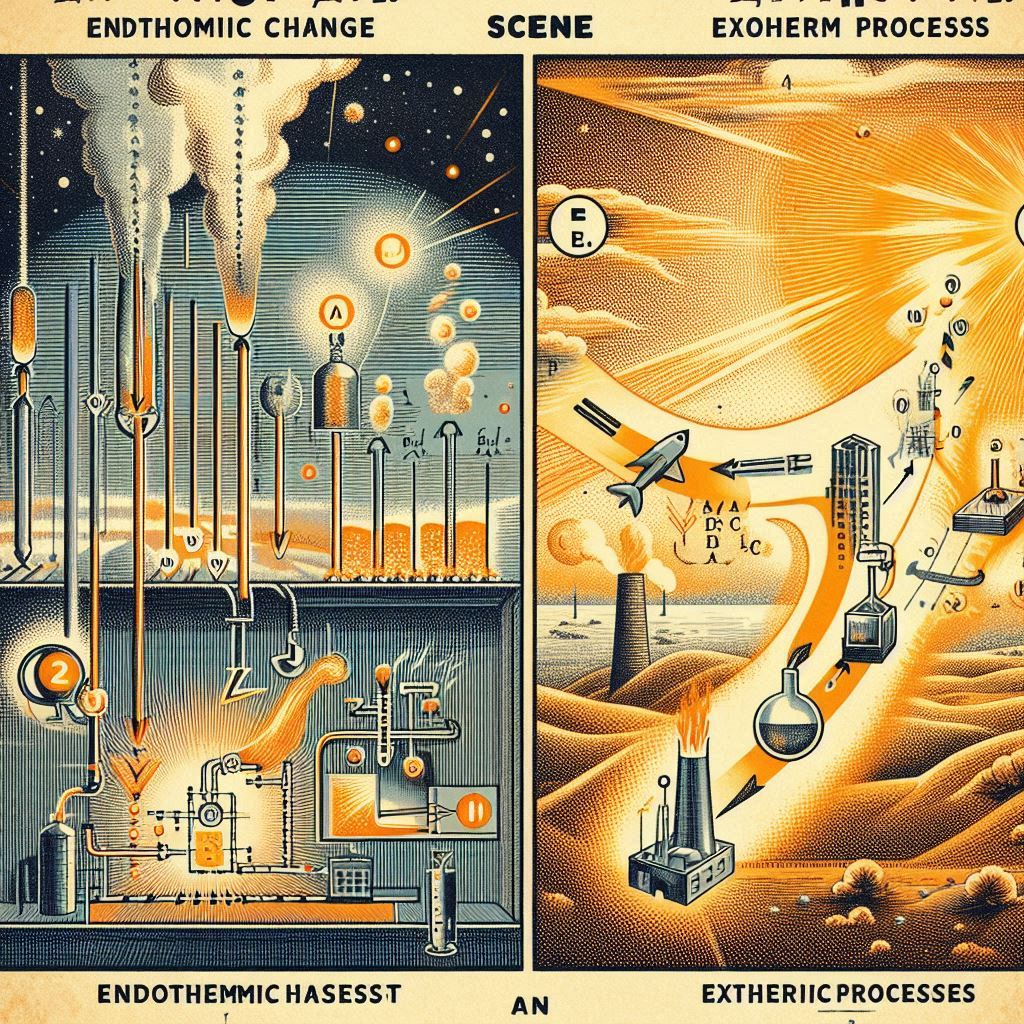

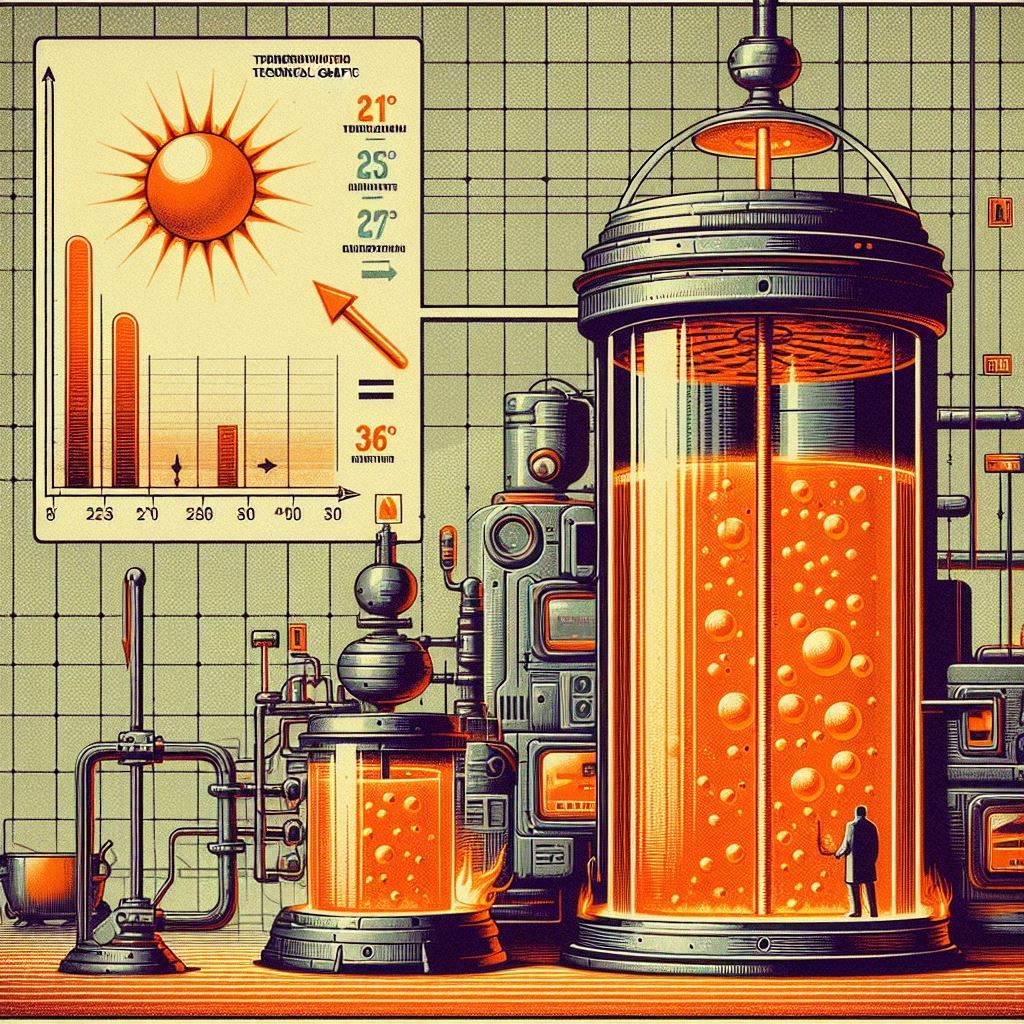

In practical terms, if you have a chemical reaction happening in a beaker at atmospheric pressure, the change in enthalpy tells you how much heat was absorbed from or released into the surroundings. If the reaction absorbs heat, it’s endothermic (ΔH>0), and if it releases heat, it’s exothermic (ΔH<0).
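Because enthalpy is a state function, the ΔH of a reaction can be computed from tabulated standard enthalpies of formation (Hess’s law) without measuring the reaction itself. The sketch below does this for the combustion of methane; the ΔHf values are approximate textbook figures at 298.15 K, and the helper names are hypothetical.

```python
# Approximate standard enthalpies of formation at 298.15 K, in kJ/mol.
DELTA_HF = {
    "CH4(g)": -74.8,
    "O2(g)": 0.0,  # elements in their standard state are zero by definition
    "CO2(g)": -393.5,
    "H2O(l)": -285.8,
}


def side_total(side: dict[str, float]) -> float:
    """Sum of n·ΔHf over one side of a balanced equation."""
    return sum(n * DELTA_HF[species] for species, n in side.items())


def reaction_enthalpy(reactants: dict[str, float], products: dict[str, float]) -> float:
    """ΔH_rxn = Σ n·ΔHf(products) − Σ n·ΔHf(reactants), kJ per mole of reaction."""
    return side_total(products) - side_total(reactants)


def classify(delta_h: float) -> str:
    if delta_h > 0:
        return "endothermic"
    if delta_h < 0:
        return "exothermic"
    return "thermoneutral"


# CH4 + 2 O2 -> CO2 + 2 H2O(l)
dh = reaction_enthalpy({"CH4(g)": 1, "O2(g)": 2}, {"CO2(g)": 1, "H2O(l)": 2})
print(f"ΔH = {dh:.1f} kJ/mol ({classify(dh)})")  # ΔH = -890.3 kJ/mol (exothermic)
```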

By understanding these fundamental components, we can see that enthalpy is a way to quantify the energy changes that occur during physical processes and chemical reactions, especially in terms of heat exchange with the surroundings. This deep understanding allows us to predict how a system will behave under different conditions, which is essential for fields like chemistry, physics, and engineering.

Here are the fundamental truths of enthalpy:

State Function: Enthalpy is a state function, meaning it depends only on the current state of the system, not the path taken to reach that state.

Heat Content: Enthalpy is often described as a system’s ‘heat content’; more precisely, its change equals the heat exchanged at constant pressure, and its value encompasses both the internal energy and the energy required to displace the system’s environment.

Internal Energy and Pressure-Volume Term: Enthalpy combines the system’s internal energy with the product of its pressure and volume, which accounts for the work required to make room for the system (H = E + PV).

Changes in Enthalpy: Changes in enthalpy (ΔH) reflect the heat absorbed or released during a process at constant pressure, which is crucial for understanding chemical reactions and phase changes.

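Conservation: Enthalpy itself is not a conserved quantity. The first law of thermodynamics conserves energy, so the internal energy of an isolated system is constant; enthalpy is the bookkeeping quantity that makes this energy balance convenient for processes at constant pressure.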

Predictive Power: The sign of ΔH tells us whether a reaction is endothermic (ΔH>0) or exothermic (ΔH<0), and because enthalpy is a state function, tabulated values let us calculate ΔH for reactions that have never been measured directly. This is essential for chemical engineering and thermodynamics.

These truths form the basis for understanding how enthalpy is used to describe energy changes in physical and chemical processes, particularly in relation to heat transfer.

III. Applying First Principles to Entropy

Applying first principles thinking to the concept of entropy means breaking it down to its most fundamental truths and then building up an understanding from there. Entropy is a measure of the disorder or randomness in a system. It’s a central concept in the second law of thermodynamics, which states that the entropy of an isolated system will tend to increase over time.

Let’s dissect this further:

Fundamental Definition: Entropy, symbolized as S, is tied to the number of ways a system’s components can be arranged without changing its observable state; the more arrangements there are, the more ‘disordered’ the system appears. It’s a statistical measure of uncertainty or unpredictability.

Microstates and Macrostates: At a microscopic level, entropy relates to the number of microstates (specific ways particles can be arranged) that correspond to a macrostate (the overall state of the system). The greater the number of microstates, the higher the entropy.

Thermodynamic Processes: In thermodynamics, entropy is a state function, meaning it depends only on the current state of the system, not on how it got there. It’s associated with the energy in a system that is not available to do work.

Irreversibility: The increase in entropy also represents the irreversibility of natural processes. Energy transformations are not 100% efficient, and some energy is always dispersed in a form that cannot be used to do work.

Information Theory: Entropy also has a definition in information theory, where it measures the amount of information that is missing before reception and is sometimes referred to as Shannon entropy.
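The microstate/macrostate idea can be made concrete with a toy system of coins rather than molecules, an assumption made purely for illustration. Each exact heads/tails sequence is a microstate, the number of heads is the macrostate, and Boltzmann’s relation S = k_B ln W assigns the highest entropy to the macrostate with the most microstates.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K (exact SI value)


def microstates(n_coins: int, n_heads: int) -> int:
    """Number of heads/tails sequences (microstates) with exactly n_heads heads."""
    return math.comb(n_coins, n_heads)


def boltzmann_entropy(w: int) -> float:
    """S = k_B ln W for a macrostate with W equally likely microstates."""
    return K_B * math.log(w)


n = 4  # toy system: four coins
for heads in range(n + 1):
    w = microstates(n, heads)
    print(f"{heads} heads: W = {w}, S = {boltzmann_entropy(w):.3e} J/K")
```

For four coins, the ‘two heads’ macrostate has six microstates, more than any other, while ‘all heads’ has a single microstate and therefore zero entropy.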

By understanding these fundamental aspects, we can see that entropy is not just about disorder but is a profound concept that describes the intrinsic limitations of energy transformations, the directionality of time, and the flow of information. It’s a bridge between the microscopic world of atoms and molecules and the macroscopic world we observe, providing a deep understanding of why certain processes occur spontaneously and others do not. This understanding is crucial for fields ranging from physics and chemistry to information theory and even economics.

Here are the fundamental truths of entropy:

Measure of Disorder: Entropy is a measure of the disorder or randomness within a system. It quantifies the number of microscopic configurations that correspond to a thermodynamic system’s macroscopic state.

Second Law of Thermodynamics: Entropy is central to the second law of thermodynamics, which states that the total entropy of an isolated system can never decrease over time. This implies that processes occur in a direction that increases entropy.

State Function: Like enthalpy, entropy is a state function. Its value is determined by the state of the system and is independent of the process by which the system arrived at that state.

Unavailability of Energy: Entropy is associated with the portion of a system’s energy that is not available to do work. As entropy increases, the amount of usable energy decreases.

Irreversibility: The concept of entropy is tied to the irreversibility of natural processes. Once entropy increases, it requires external work to return the system to its original state.

Statistical Mechanics: From a statistical mechanics perspective, entropy reflects the number of ways particles can be arranged, known as microstates, while still satisfying the macroscopic quantities like temperature and pressure.

Information Theory: In information theory, entropy measures the uncertainty involved in predicting the value of a random variable. This concept is analogous to the idea of unpredictability in thermodynamic systems.
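Shannon’s entropy, H = −Σ p log₂ p, can be computed directly. This sketch (the function name is hypothetical) shows that a fair coin is maximally unpredictable at 1 bit, a biased coin is more predictable, and a certain outcome carries no uncertainty at all.

```python
import math


def shannon_entropy(probs: list[float]) -> float:
    """H = Σ p·log2(1/p) in bits; zero-probability outcomes contribute nothing."""
    if not math.isclose(sum(probs), 1.0):
        raise ValueError("probabilities must sum to 1")
    return sum(p * math.log2(1 / p) for p in probs if p > 0)


print(shannon_entropy([0.5, 0.5]))  # fair coin: 1.0 bit
print(shannon_entropy([0.9, 0.1]))  # biased coin: about 0.469 bits
print(shannon_entropy([1.0, 0.0]))  # certain outcome: 0.0 bits
```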

These truths help us understand the inherent directionality of time and the flow of energy in the universe, providing a framework for predicting the behaviour of physical systems.

IV. Entropy and the Arrow of Time

Entropy is deeply related to the concept of the arrow of time, which is the directionality of time from the past to the future. This relationship is rooted in the second law of thermodynamics, which states that the entropy of an isolated system will not decrease over time. Here’s how entropy relates to the arrow of time:

Irreversibility: Entropy increases in spontaneous processes, making them irreversible. This irreversibility gives time a direction: given two states of an isolated system, we can tell which came first, because the earlier one has lower entropy.

Macroscopic Predictability: While the fundamental laws of physics are, to a very good approximation, time-symmetric (they don’t distinguish between past and future), entropy adds a direction to time because macroscopic processes tend to move towards a state of higher entropy.

Microscopic vs. Macroscopic: At a microscopic level, individual particle interactions are time-symmetric, but at a macroscopic level, the collective behaviour of particles tends to increase entropy, thus indicating a forward progression of time.

Memory and Information: It is often argued that our memory is tied to increasing entropy: we remember the past and not the future because the past is associated with lower entropy states.

Cosmological Implications: The arrow of time also has implications in cosmology. The universe appears to have begun in a state of very low entropy, and many physicists trace the thermodynamic arrow of time back to that starting point.

In essence, entropy provides a thermodynamic arrow of time, distinguishing the past (lower entropy) from the future (higher entropy) and giving us a sense of the flow of time in our observable universe. Entropy is one of the few physical quantities whose behaviour singles out a direction of time, and that direction aligns with our everyday experience of aging, decay, and the one-way character of natural processes.

Enthalpy itself does not directly dictate the arrow of time as entropy does. The concept of the arrow of time is primarily associated with the second law of thermodynamics and the increase of entropy in an isolated system. However, enthalpy changes can be related to the arrow of time in the context of energy transfer and chemical reactions.

Here’s how enthalpy relates to the arrow of time:

Direction of Processes: Enthalpy changes indicate whether a process is endothermic or exothermic. While this doesn’t establish an arrow of time, it does show the direction in which energy is transferred – either absorbed from or released into the surroundings.

Irreversible Reactions: Many chemical reactions are practically irreversible, proceeding in one direction until the reactants are consumed. The heat released by an exothermic reaction (−ΔH) flows into the surroundings and raises their entropy by roughly −ΔH/T, so enthalpy changes contribute indirectly to the overall increase in entropy and hence to the arrow of time.

Equilibrium and Spontaneity: The concept of Gibbs free energy, which combines enthalpy, entropy, and temperature, determines the spontaneity of a process. While entropy is the driving force for the directionality of time, enthalpy is a component of the energy changes that occur as a system moves towards equilibrium.
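To see how the enthalpy term feeds into the direction of a process, the sketch below computes the total entropy change ΔS_total = ΔS_sys − ΔH/T for ammonia synthesis, N₂ + 3H₂ → 2NH₃. It assumes approximate standard values and treats ΔH and ΔS as independent of temperature, which is only a rough approximation.

```python
# Approximate standard values for N2(g) + 3 H2(g) -> 2 NH3(g) near 298 K.
DELTA_H = -92.2e3     # J/mol, exothermic: heat flows to the surroundings
DELTA_S_SYS = -198.7  # J/(mol·K): 4 mol of gas become 2, so the system gets more ordered


def total_entropy_change(delta_h: float, delta_s_sys: float, temp_k: float) -> float:
    """Return ΔS_total = ΔS_sys + ΔS_surr, with ΔS_surr = -ΔH/T (surroundings at T)."""
    delta_s_surr = -delta_h / temp_k
    return delta_s_sys + delta_s_surr


for t in (298.15, 700.0):
    ds = total_entropy_change(DELTA_H, DELTA_S_SYS, t)
    verdict = "favoured" if ds > 0 else "not favoured"
    print(f"T = {t:.2f} K: ΔS_total = {ds:+.1f} J/(mol·K), {verdict}")
```

The system’s own entropy falls at both temperatures; what changes is how much entropy the released heat creates in the surroundings. The sign flips near ΔH/ΔS_sys ≈ 464 K, and at constant temperature and pressure ΔG = −TΔS_total, which is why Gibbs free energy carries the same information. ‘Favoured’ here is purely thermodynamic and says nothing about reaction rate.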

In summary, while enthalpy changes are not a direct measure of the arrow of time, they are part of the broader thermodynamic processes that unfold in a direction consistent with the increase of entropy and the forward progression of time. Enthalpy changes reflect the energy dynamics within these processes, which, when combined with entropy changes, contribute to the overall directionality of time as we experience it.

V. System Types and the Arrow of Time

The nature of the system – whether it’s open, closed, or isolated – plays a significant role in how entropy behaves and relates to the arrow of time:

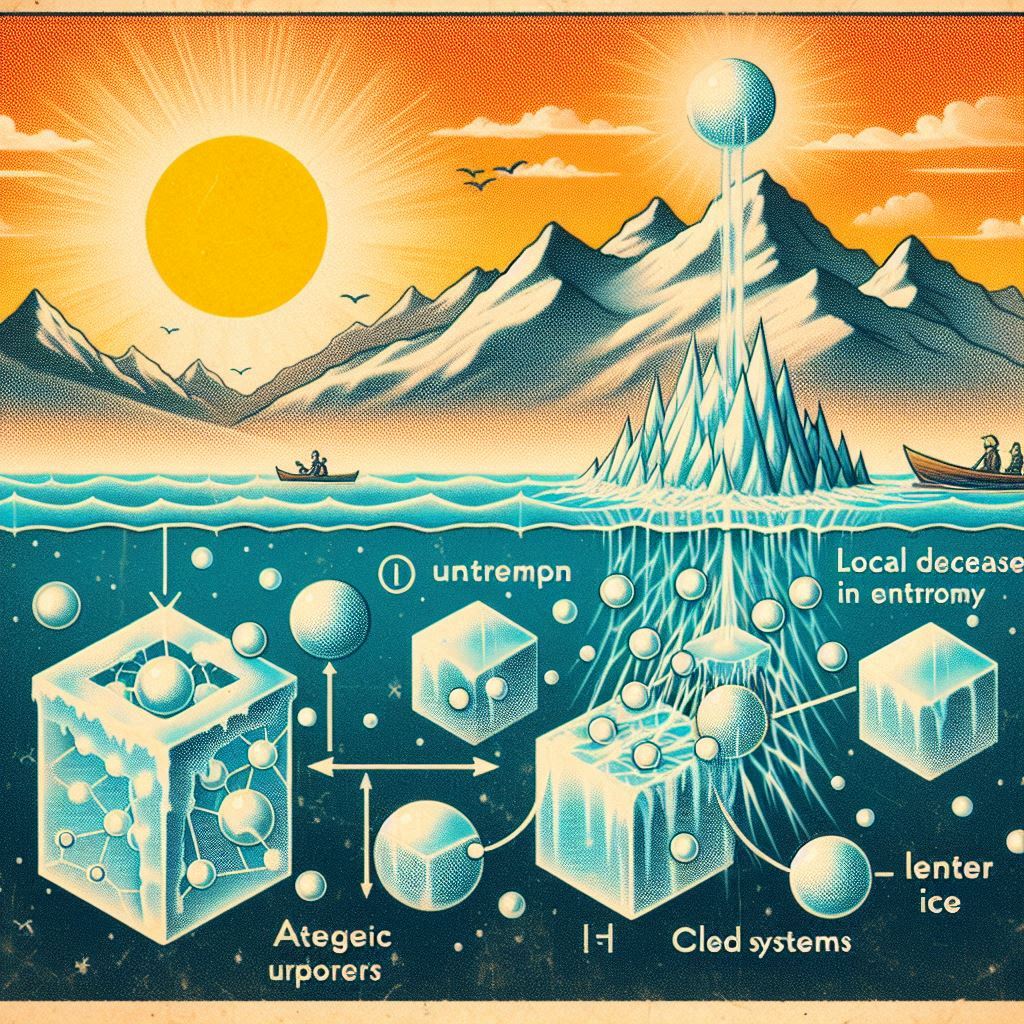

Open Systems: These systems can exchange both energy and matter with their surroundings. In open systems, the entropy can decrease locally because the system is receiving low-entropy energy or matter from the outside or expelling high-entropy energy or matter. However, the overall entropy of the system plus its surroundings will increase, in line with the second law of thermodynamics.

Closed Systems: Closed systems can exchange energy but not matter with their surroundings. The entropy of a closed system can also decrease locally, for example, when a substance within the system freezes into a crystal, becoming more ordered. However, this local decrease is always accompanied by a greater increase in the entropy of the surroundings, so the total entropy of the system plus surroundings does not decrease.

Isolated Systems: Isolated systems cannot exchange energy or matter with their surroundings. The entropy of an isolated system will tend to increase over time, reaching a maximum at thermodynamic equilibrium. This is the classic case where the second law of thermodynamics dictates the arrow of time, as the system evolves in a way that increases entropy, reflecting the irreversible passage of time.

In summary, while local decreases in entropy can occur in open and closed systems due to exchanges with the surroundings, the total entropy of the system plus its surroundings will always increase or remain the same. This universal tendency for entropy to increase in isolated systems is what gives us the thermodynamic arrow of time, pointing from the past (lower entropy) to the future (higher entropy).

A negative entropy change, that is, a decrease in a system’s entropy, can seem counterintuitive, since the second law of thermodynamics states that the entropy of an isolated system tends to increase over time. However, such decreases are possible under certain conditions, particularly in open and closed systems.

Here’s a breakdown of how negative entropy changes can occur:

Local Decreases in Entropy: In open and closed systems, entropy can decrease locally. For example, when water freezes into ice, the molecules become more ordered, and the entropy of the water decreases. This is a negative change in entropy for the system.

Energy Exchange: Negative entropy changes in a system are often accompanied by an exchange of energy with the surroundings. In the case of freezing water, the heat released into the surroundings increases the surroundings’ entropy, ensuring that the total entropy change (system plus surroundings) is non-negative.

Negentropy: The term “negentropy” has been used to describe situations where systems become more ordered. In information theory and statistics, it is defined as the difference in entropy between a given distribution and the Gaussian distribution with the same mean and variance; defined this way, it is always nonnegative and is used to describe systems that maintain or increase order.

Entropy and Life: Living organisms are often cited as examples of systems that maintain low entropy or negentropy. Through metabolism, organisms import low-entropy energy (in the form of food or sunlight) and export high-entropy waste, maintaining a state of low entropy.

Entropy Change Formula: For heat transferred reversibly at a constant absolute temperature, the change in entropy is ΔS = Q/T, where Q is the heat exchanged and T is the temperature in kelvin. If Q is negative (heat is released by the system), then ΔS will be negative, indicating a decrease in entropy for the system.
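Applying ΔS = Q/T to the freezing example, assuming 1 mol of water freezing at 0 °C (approximate enthalpy of fusion 6010 J/mol) and hypothetical surroundings at −10 °C, shows the system’s entropy falling while the total still rises.

```python
# Freezing 1 mol of liquid water (approximate values).
Q_FUSION = 6010.0  # J/mol released by the water as it freezes
T_MELT = 273.15    # K, water's normal freezing point
T_SURR = 263.15    # K, hypothetical surroundings at -10 °C


def entropy_change(q_j: float, temp_k: float) -> float:
    """ΔS = Q/T for heat Q transferred reversibly at constant absolute temperature T."""
    return q_j / temp_k


ds_water = entropy_change(-Q_FUSION, T_MELT)  # water loses heat: negative
ds_surr = entropy_change(+Q_FUSION, T_SURR)   # colder surroundings gain the same heat
ds_total = ds_water + ds_surr

print(f"ΔS_water        = {ds_water:.2f} J/K")
print(f"ΔS_surroundings = {ds_surr:.2f} J/K")
print(f"ΔS_total        = {ds_total:.2f} J/K")
```

If the surroundings were also at exactly 273.15 K, the two terms would cancel: that is the reversible limit, in which total entropy stays constant.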

In summary, while the overall entropy of the universe tends to increase, local decreases in entropy are possible and are a fundamental aspect of many natural processes, including the formation of complex structures and the existence of life itself.

VI. Negentropy and Its Implications

Negentropy, also known as negative entropy, is a concept that measures the degree of order or organization in a system, as opposed to the disorder measured by entropy. The idea of ‘negative entropy’ was introduced by Erwin Schrödinger in his 1944 book “What is Life?”, and the shortened term ‘negentropy’ was later coined by Léon Brillouin. Here’s a detailed explanation of negentropy:

Statistical Measure: Negentropy is used in information theory and statistics as a measure of distance to normality. It quantifies how much a system deviates from the maximum entropy state, which is often represented by a Gaussian distribution.

Order and Organization: In a broader sense, negentropy refers to the tendency of systems to become more ordered and structured. This is the opposite of entropy, which tends toward increased disorder and randomness.

Life and Complexity: Negentropy is often associated with living systems, which maintain a state of low entropy by importing low-entropy materials (like food) and exporting high-entropy waste. This process allows organisms to maintain order and complexity, which is essential for life.

Energy Transfer: In thermodynamics, negentropy can be related to the quality of energy in a system. High-quality energy, such as electrical energy, has low entropy (high negentropy), while low-quality energy, like heat, has high entropy (low negentropy).

Information Theory: In the context of information theory, negentropy measures the amount of information or predictability in a system. A system with high negentropy has less uncertainty and more predictability.

Relation to Free Energy: Negentropy is sometimes correlated with Gibbs free energy, which is a measure of the amount of work that can be extracted from a system. Systems with high negentropy are often those from which work can be extracted more efficiently.

Mathematical Definition: Mathematically, negentropy is defined as the difference in entropy between a given distribution and the Gaussian distribution with the same mean and variance. It is always nonnegative and vanishes if and only if the signal is Gaussian.
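The information-theoretic definition J(X) = H(Gaussian) − H(X) can be evaluated in closed form for simple distributions. This sketch uses the known differential entropies of the uniform and Laplace distributions (in nats); the function names are hypothetical. Because the Gaussian has the largest entropy for a given variance, J is never negative.

```python
import math


def gaussian_entropy(variance: float) -> float:
    """Differential entropy (nats) of a normal distribution: ½ ln(2πeσ²)."""
    return 0.5 * math.log(2 * math.pi * math.e * variance)


def negentropy_uniform(width: float) -> float:
    """J = H(Gaussian with same variance) − H(uniform on an interval of this width)."""
    variance = width**2 / 12     # variance of a uniform distribution
    h_uniform = math.log(width)  # its differential entropy, nats
    return gaussian_entropy(variance) - h_uniform


def negentropy_laplace(scale: float) -> float:
    """J for a Laplace distribution with scale b (variance 2b², entropy 1 + ln 2b)."""
    variance = 2 * scale**2
    h_laplace = 1 + math.log(2 * scale)
    return gaussian_entropy(variance) - h_laplace


print(f"uniform: J = {negentropy_uniform(1.0):.4f} nats")  # ≈ 0.1765
print(f"laplace: J = {negentropy_laplace(1.0):.4f} nats")  # ≈ 0.0724
```

For the uniform distribution the result does not depend on the interval width, since negentropy is unchanged by rescaling.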

Negentropy is a fundamental concept that helps us understand the organization, complexity, and energy dynamics of systems, particularly living systems and their ability to maintain order in a universe that naturally tends toward disorder. It’s a key idea in fields ranging from thermodynamics to information theory, biology, and even the study of social systems.

Negentropy, or negative entropy, is a concept that is intimately connected to the concept of life. It refers to the process by which living systems reduce their internal entropy at the expense of energy taken from their environment, which is often in a more ordered state. Here’s how negentropy relates to life:

Order from Disorder: Life is characterized by its ability to create order out of disorder. Living organisms take in low-entropy energy and matter, such as sunlight and food, and give off high-entropy waste and heat, all while maintaining and increasing their internal order.

Energy Utilization: Living systems are adept at capturing energy from their environment and using it to maintain and build complex structures, such as cells, tissues, and organs. This process of energy conversion and utilization is a manifestation of negentropy.

Thermodynamic Balance: While the second law of thermodynamics states that the total entropy of an isolated system tends to increase, living systems are not isolated. They exchange energy and matter with their surroundings, which allows them to maintain or decrease their internal entropy.

Evolutionary Perspective: From an evolutionary standpoint, negentropy can be seen as a driving force for the origin and evolution of life. Organisms that are better at capturing and utilizing energy to create order are more likely to survive and reproduce.

Information Storage: Life stores genetic information in the form of DNA, which is a highly ordered structure. The maintenance and replication of this information are processes that involve negentropy, as they create order and reduce uncertainty.

In summary, negentropy is a fundamental aspect of life that describes how living systems maintain their structure, function, and complexity in a universe that naturally tends toward increased entropy and disorder. It’s a key concept in understanding the physical principles underlying the emergence and sustainability of life.

VII. Negentropy in Human Creations

When humans create machines, buildings, technology, or even AI, they are engaging in the creation of systems that exhibit negentropy. These creations are examples of organizing energy and resources into structured and ordered forms, which is the essence of negentropy. Here’s how these human creations relate to negentropy:

Machines: Machines convert energy from one form to another and perform work. The design and operation of machines involve organizing components in a way that maximizes efficiency and minimizes entropy production.

Buildings: Architectural design is a process of ordering materials into a coherent structure that serves a purpose, whether it’s for living, working, or any other function. Buildings are negentropic in that they create an ordered environment from the raw materials of nature.

Technology: Technological advancements often involve the development of more efficient ways to process information and energy. As technology progresses, it tends to move towards systems that are more ordered and less entropic.

Artificial Intelligence (AI): AI systems organize data and algorithms to perform tasks that would require intelligence if done by humans. The creation of AI involves structuring information in a way that reduces uncertainty and increases predictability, which is a form of negentropy.

In all these instances, humans are using their knowledge and skills to reduce entropy within the system they are creating, even though the overall entropy of the universe still increases according to the second law of thermodynamics. This ability to create order from disorder is a defining characteristic of life and human activity. It’s a reflection of the inherent drive towards complexity and organization that can be observed throughout nature and human endeavours.

One example of a technology that maximizes negentropy in its operation is the ‘Passivhaus’, or Passive House, standard in building construction. This design philosophy focuses on creating buildings that have an exceptionally low energy demand for heating and cooling, which is achieved through meticulous design and construction techniques. Here’s how it maximizes negentropy:

Insulation: Passive Houses are designed with super-insulation to keep the desired temperature inside, reducing the need for external energy input for heating or cooling.

Air Tightness: They are virtually airtight, preventing uncontrolled loss of heated or cooled air, which is a form of energy loss.

Heat Recovery: These buildings often include a heat recovery system that recycles the heat from the exhaust air to warm the incoming fresh air, thus conserving energy.

Positioning and Design: The positioning of windows and the overall design of the building are optimized to make the most of the sun’s energy for natural heating and lighting.
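The heat-recovery point can be quantified with the usual steady-state estimate for ventilation loss, Q ≈ ρc_p · V̇ · ΔT · (1 − η), taking an approximate volumetric heat capacity of air of 0.33 Wh/(m³·K). The airflow, temperatures, and 75 % recovery efficiency are hypothetical values for illustration; the current Passive House Institute criteria should be consulted for actual requirements.

```python
# Ventilation heat loss with and without heat recovery (steady state).
AIR_HEAT_CAPACITY = 0.33  # Wh/(m³·K), approximate volumetric heat capacity of air


def ventilation_loss_w(flow_m3_per_h: float, t_in_c: float, t_out_c: float,
                       recovery_efficiency: float = 0.0) -> float:
    """Heat lost (W) by replacing indoor air with outdoor air; efficiency in [0, 1]."""
    if not 0.0 <= recovery_efficiency <= 1.0:
        raise ValueError("recovery_efficiency must be between 0 and 1")
    delta_t = t_in_c - t_out_c
    # Wh/(m³·K) × m³/h × K = W; recovered heat is subtracted via (1 - η)
    return AIR_HEAT_CAPACITY * flow_m3_per_h * delta_t * (1.0 - recovery_efficiency)


# Hypothetical dwelling: 100 m³/h of fresh air, 20 °C inside, 0 °C outside.
no_recovery = ventilation_loss_w(100, 20, 0)
with_recovery = ventilation_loss_w(100, 20, 0, recovery_efficiency=0.75)
print(f"no recovery:   {no_recovery:.0f} W")    # 660 W
print(f"75 % recovery: {with_recovery:.0f} W")  # 165 W
```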

By minimizing energy loss and maximizing the use of natural and recycled energy, Passive House buildings exemplify a technology that operates with high negentropy, creating an ordered and efficient system that reduces the entropy produced by its energy use.

When a technology like the Passive House standard reduces entropy within a system, it does so by making the system more ordered and efficient. However, this local decrease in entropy is always accompanied by an increase in entropy elsewhere, ensuring that the overall entropy of the universe still increases in accordance with the second law of thermodynamics.

Here’s how it works:

Energy Input: The construction and operation of such a building require energy, which comes from external sources. The production and use of this energy contribute to an increase in entropy outside the system.

Material Use: The materials used in construction are processed and transported, which involves energy expenditure and entropy increase in the environment.

Heat Exchange: Even highly efficient systems like Passive Houses will still lose some heat to the environment, which increases the entropy of the surroundings.

Overall Balance: While the building itself may have low entropy, the energy and resources used to create and maintain it result in a net increase in entropy when considering the larger system that includes the building’s environment and energy sources.
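The ‘Heat Exchange’ and ‘Overall Balance’ points can be put into numbers. When heat Q leaves a building at T_in and enters the outdoors at T_out, the interior loses Q/T_in of entropy and the outdoors gains Q/T_out, so the net entropy generated is Q(1/T_out − 1/T_in), which is positive whenever T_out < T_in. The heat-loss figures below are hypothetical.

```python
def entropy_generation_w_per_k(heat_loss_w: float, t_in_k: float, t_out_k: float) -> float:
    """Entropy generated per second (W/K) when heat flows from t_in_k to t_out_k.

    The interior loses Q/T_in of entropy and the outdoors gains Q/T_out;
    because T_out < T_in, the net result is positive.
    """
    if t_in_k <= 0 or t_out_k <= 0:
        raise ValueError("temperatures must be absolute (kelvin) and positive")
    return heat_loss_w / t_out_k - heat_loss_w / t_in_k


T_IN, T_OUT = 293.15, 273.15  # 20 °C inside, 0 °C outside
# Hypothetical steady heat losses for two buildings of the same size.
for label, q in (("conventional", 2000.0), ("passive", 500.0)):
    print(f"{label:>12}: {entropy_generation_w_per_k(q, T_IN, T_OUT):.3f} W/K")
```

Cutting the heat loss by a factor of four cuts the entropy generated by the same factor, but never to zero while a temperature difference remains.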

In essence, any local decrease in entropy is offset by a greater increase in entropy elsewhere, ensuring that the total entropy of the universe continues to rise. This is a fundamental principle of thermodynamics and reflects the irreversible nature of real-world processes. The Passive House standard minimizes the entropy increase by maximizing efficiency, but it cannot eliminate it entirely.

VIII. Sustainability and Entropy Management

Sustainability, in the context of environmental science and engineering, is about minimizing the rate at which entropy increases within a system or process over a certain period. It’s an effort to create systems that are as efficient and waste-minimizing as possible, acknowledging that true sustainability – where no entropy would increase at all – is not achievable due to the second law of thermodynamics.

Here’s a more detailed look at sustainability in terms of entropy:

Efficiency: By increasing the efficiency of energy and resource use, we can reduce the amount of waste and, consequently, the rate of entropy increase.

Renewable Resources: Utilizing renewable resources, which can be replenished naturally, helps to maintain a balance and delays the depletion of resources and the associated increase in entropy.

Recycling: Recycling materials allows us to reuse them, which can be seen as a form of negentropy because it imposes order on what would otherwise be waste, although the recycling process itself consumes energy and raises entropy elsewhere.

Innovation: Developing new technologies and processes that are less energy-intensive or that can capture and reuse waste energy contributes to slowing the increase of entropy.

Temporal Horizon: The concept of a temporal horizon acknowledges that we are looking to sustain our systems for as long as possible, but there is an understanding that eventually, all systems will reach a state of maximum entropy.

In essence, sustainability efforts are about managing the inevitable increase in entropy in a way that supports the continued existence and health of ecological and human-made systems for as long as possible.

For growth or to maintain a stable state of equilibrium, whether dynamic or static, new resources and/or energy must continually be introduced into the ‘world system’. This is because all systems tend to move towards a state of higher entropy over time, as dictated by the second law of thermodynamics. Here’s how this principle applies:

Growth: For any system to grow, it must have a supply of energy and resources that exceeds what is needed to maintain its current state. This surplus allows for the creation of new structures, processes, or organisms.

Dynamic Equilibrium: Even to maintain a dynamic equilibrium, where the system appears stable but is actually in a constant state of flux, there must be a continuous flow of energy and resources through the system. This is because some energy is always lost as waste heat due to inefficiencies in the system’s processes.

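Static Equilibrium: In theory, a system in true static equilibrium requires no input of energy or resources because nothing changes, but that state is also one of maximum entropy. In practice, holding any system in a fixed state away from equilibrium, such as a building kept warmer than the outdoors, requires continuous energy input to counteract the natural tendency towards disorder.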

World System: When we talk about the ‘world system’, we’re referring to the global ecosystem, which includes human societies. For this system to sustain itself and thrive, it must have a continuous input of energy from the sun and resources from the earth.
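The need for continuous throughput can be illustrated with a toy stock-and-flow model, dX/dt = inflow − kX, where X stands for any maintained stock of order or resources and k for the rate at which it degrades. This is a hypothetical sketch, not a thermodynamic model; the names and numbers are assumptions.

```python
def simulate_stock(initial: float, inflow: float, loss_rate: float,
                   steps: int, dt: float = 1.0) -> list[float]:
    """Euler integration of dX/dt = inflow - loss_rate * X (toy stock-and-flow model)."""
    stock = [initial]
    for _ in range(steps):
        x = stock[-1]
        stock.append(x + dt * (inflow - loss_rate * x))
    return stock


K = 0.05    # hypothetical fraction of the stock degraded per time step
X0 = 100.0  # hypothetical starting stock, arbitrary units

sustained = simulate_stock(X0, inflow=K * X0, loss_rate=K, steps=50)
starved = simulate_stock(X0, inflow=0.0, loss_rate=K, steps=50)
print(f"with throughput: {sustained[-1]:.1f}")  # stays at 100.0
print(f"without input:   {starved[-1]:.1f}")    # decays toward zero
```

The stock holds steady only while inflow matches losses (inflow = kX); with the input cut off, it decays toward zero.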

In summary, the introduction of new resources and/or energy is essential for the maintenance and growth of any system within the universe. This principle underlies the concept of sustainability, which aims to manage resource and energy flows in a way that can support the long-term health and stability of our world system.

IX. The Laws of Thermodynamics Revisited

The laws of thermodynamics are fundamental principles that describe how energy and entropy behave within physical systems. Here’s an explanation of each law through the lenses of our exploration:

Zeroth Law of Thermodynamics:

Statement: If two systems are each in thermal equilibrium with a third system, then they are in thermal equilibrium with each other.

Explanation: This law allows us to define temperature and establish a temperature scale. It’s the basis for the concept of thermal equilibrium, where no net heat flow occurs between objects at the same temperature.

First Law of Thermodynamics (Law of Energy Conservation):

Statement: Energy cannot be created or destroyed in an isolated system; it can only be transformed from one form to another.

Explanation: This law is a statement of the conservation of energy. It underpins the concept of enthalpy, as changes in enthalpy reflect the heat absorbed or released in a process at constant pressure, which is a form of energy transfer.

Second Law of Thermodynamics:

Statement: The total entropy of an isolated system can never decrease over time, and is constant if and only if all processes are reversible.

Explanation: This law introduces the arrow of time and explains why certain processes are irreversible. It’s the foundation for understanding entropy and negentropy, as it dictates that the universe tends toward a state of maximum entropy. Local decreases in entropy (negentropy) can still occur in systems that exchange energy with their surroundings, provided the entropy of the surroundings increases by at least as much.

Third Law of Thermodynamics:

Statement: The entropy of a perfect crystal at absolute zero temperature is zero.

Explanation: This law sets a reference point for the measure of entropy. A related consequence, often called the unattainability principle, is that absolute zero cannot be reached in a finite number of steps. As the temperature approaches zero, each cooling step can remove only a smaller and smaller amount of entropy, so the remaining thermal energy can never be extracted completely.

Through these laws, we understand the flow of energy, the inevitability of increasing disorder, and the conditions under which order can be maintained or created within the universe. They are crucial for grasping the principles of sustainability and the challenges of creating systems that minimize the increase of entropy over time.

The laws of thermodynamics provide a foundation for understanding the concept of Gibbs free energy, which is a powerful tool for analysing the spontaneity and feasibility of chemical reactions and processes. Gibbs free energy is a thermodynamic quantity that combines energy conservation and entropy change. It determines whether a process can proceed spontaneously at constant temperature and pressure, and how much useful work that process can deliver. It is defined as the difference between the enthalpy of a system (its internal energy plus the pressure-volume term) and the product of its absolute temperature and entropy.

Applying first principles thinking with a systems thinking mindset to the concept of Gibbs free energy involves breaking down the laws of thermodynamics to their fundamental truths and then understanding how these laws interact within a system to give rise to the concept of Gibbs free energy. Here’s how they relate:

First Law of Thermodynamics (Conservation of Energy):

Fundamental Truth: Energy cannot be created or destroyed; it can only change forms.

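Systems Perspective: In any system, energy is accounted for rather than lost: the change in internal energy equals the heat added minus the work done by the system. At constant pressure, the heat exchanged equals the change in enthalpy. When a chemical reaction occurs, energy is either absorbed from or released into the surroundings.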

Second Law of Thermodynamics (Entropy Increase):

Fundamental Truth: The entropy of an isolated system tends to increase over time.

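Systems Perspective: Isolated systems naturally evolve towards a state of maximum entropy. For systems that exchange energy with their surroundings, it is the total entropy of system plus surroundings that increases. This law predicts the direction of spontaneous processes and is crucial for understanding the feasibility of reactions within a system.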

Third Law of Thermodynamics (Absolute Zero Entropy):

Fundamental Truth: The entropy of a perfect crystal at absolute zero is zero.

Systems Perspective: This law provides a reference point for absolute entropy values. It also implies that absolute zero, where all removable thermal energy is gone, cannot be reached in a finite number of steps.

Now, let’s connect these laws to Gibbs free energy:

Gibbs Free Energy (G):

Definition: The decrease in Gibbs free energy is the maximum work, other than pressure-volume (expansion) work, that a process can deliver at constant temperature and pressure. It’s defined as G = H − TS, where H is enthalpy, T is absolute temperature, and S is entropy. For a change at constant temperature, ΔG = ΔH − TΔS.

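First Principles and Systems Thinking: By considering the first and second laws, we can deduce that Gibbs free energy combines energy conservation and entropy increase to determine the spontaneity of a process within a system. At constant temperature and pressure, the heat ΔH exchanged by the system changes the entropy of the surroundings by −ΔH/T. It follows that ΔG = ΔH − TΔS equals −T times the total entropy change of system plus surroundings. A negative ΔG is therefore just the second law expressed in terms of the system alone. It tells us whether a process will occur without the need for external energy (spontaneous) or not.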

Spontaneity and Equilibrium:

Negative ΔG: Indicates a spontaneous process; the system releases free energy.

Positive ΔG: Indicates a non-spontaneous process; the system requires free energy input.

Zero ΔG: The system is at equilibrium; there is no net change in free energy.
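The three sign cases can be checked numerically with ΔG = ΔH − TΔS. The sketch below uses melting ice as an assumed example, with approximate textbook values: ΔH ≈ +6.01 kJ/mol and ΔS ≈ +22.0 J/(mol·K). It treats both as constant over a small temperature range, which is a simplification. The tolerance in `classify` is an arbitrary choice to absorb the rounding in those values.

```python
def gibbs_change(delta_h: float, delta_s: float, temperature_k: float) -> float:
    """Return dG = dH - T*dS in J/mol (valid at constant T and P)."""
    return delta_h - temperature_k * delta_s


def classify(delta_g: float, tol: float = 1.0) -> str:
    """Map the sign of dG onto the three cases (tol in J/mol absorbs data rounding)."""
    if delta_g < -tol:
        return "spontaneous"
    if delta_g > tol:
        return "non-spontaneous"
    return "equilibrium"


# Approximate textbook values for melting ice, assumed constant near 0 degrees C.
DELTA_H_FUS = 6010.0   # J/mol: melting absorbs heat
DELTA_S_FUS = 22.0     # J/(mol K): liquid water is less ordered than ice

for t in (263.15, 273.15, 283.15):
    dg = gibbs_change(DELTA_H_FUS, DELTA_S_FUS, t)
    print(f"T = {t:.2f} K  dG = {dg:+.1f} J/mol  -> {classify(dg)}")

# The sign flips where dH = T*dS, i.e. at T = dH/dS.
print(f"crossover T = {DELTA_H_FUS / DELTA_S_FUS:.1f} K")
```

The enthalpy term opposes melting at every temperature. The TΔS term wins only above roughly 273 K, which is why ice melts in a warm room but not in a freezer.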

In essence, Gibbs free energy is a concept that arises from the interaction of the laws of thermodynamics within a system. It encapsulates the balance between enthalpy changes and entropy changes to predict the natural direction of chemical processes and the capacity of a system to perform work. This understanding is vital for fields like chemistry, physics, and engineering, where controlling and predicting the behaviour of systems is essential.

X. Gibbs Free Energy and Equilibrium

To extend the thinking about Gibbs free energy and equilibrium to different types of systems – closed, open, and isolated – we need to consider how energy and matter are exchanged in each system and how this affects the path to equilibrium.

Closed Systems:

Characteristics: Closed systems can exchange energy but not matter with their surroundings.

Minimum Gibbs Free Energy: In a closed system held at constant temperature and pressure, a state of equilibrium is reached when the Gibbs free energy is at its minimum, because there is no exchange of matter to further affect the system’s composition.

Equilibrium: At equilibrium, the reaction quotient Q equals the equilibrium constant K, and the change in Gibbs free energy ΔG is zero, indicating no net change in the system’s free energy.
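The link between Q, K and ΔG comes from the standard relation ΔG = ΔG° + RT ln Q, combined with ΔG° = −RT ln K. Together these give ΔG = RT ln(Q/K). The sketch below assumes a hypothetical reaction with K = 10 at 298.15 K. It shows that the reaction runs forward while Q < K, backward when Q > K, and stops when Q = K.

```python
import math

R = 8.314  # gas constant, J/(mol K)


def delta_g_from_quotient(q: float, k: float, temperature_k: float = 298.15) -> float:
    """dG = dG_standard + RT ln Q, with dG_standard = -RT ln K (so dG = RT ln(Q/K))."""
    delta_g_standard = -R * temperature_k * math.log(k)
    return delta_g_standard + R * temperature_k * math.log(q)


K = 10.0  # hypothetical equilibrium constant, for illustration only

for q in (0.1, 10.0, 1000.0):
    dg = delta_g_from_quotient(q, K)
    direction = "forward" if dg < 0 else ("no net change" if dg == 0 else "reverse")
    print(f"Q = {q:g}: dG = {dg:+.0f} J/mol ({direction})")
```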

Open Systems:

Characteristics: Open systems can exchange both energy and matter with their surroundings.

Minimum Gibbs Free Energy: The concept of minimum Gibbs free energy in open systems is more complex due to the continuous input and output of matter. Equilibrium in open systems is dynamic, as the system can reach a steady state where the rates of input and output are balanced.

Equilibrium: What looks like equilibrium in an open system is really a steady state. It is not static but is maintained by the flow of materials and energy through the system. The Gibbs free energy may not reach a minimum but will be stable over time as long as the external conditions remain constant.
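The difference between a steady state and a true equilibrium shows up in a simple stock-and-flow model. This is a generic toy model that is not taken from the post. It assumes a hypothetical well-mixed open system with a constant inflow F and an outflow proportional to the amount present, k·X, so that dX/dt = F − kX. The stock settles at F/k and holds there only while the inflow continues.

```python
def simulate_open_system(inflow: float, k_out: float, x0: float, dt: float, steps: int) -> list[float]:
    """Euler-integrate dX/dt = inflow - k_out * X for a hypothetical well-mixed open system."""
    x = x0
    history = [x]
    for _ in range(steps):
        x += dt * (inflow - k_out * x)  # net change: what enters minus what leaves
        history.append(x)
    return history


inflow, k_out = 5.0, 0.5  # hypothetical rates, arbitrary units
trajectory = simulate_open_system(inflow, k_out, x0=0.0, dt=0.1, steps=200)
print(f"after 200 steps: {trajectory[-1]:.4f} (steady state F/k = {inflow / k_out:.4f})")

# Cut the inflow and the stock drains away: the stable level was a flow balance.
drained = simulate_open_system(0.0, k_out, x0=trajectory[-1], dt=0.1, steps=200)
print(f"after the inflow stops: {drained[-1]:.4f}")
```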

Isolated Systems:

Characteristics: Isolated systems do not exchange energy or matter with their surroundings.

Minimum Gibbs Free Energy: This criterion does not apply to an isolated system, because there are no surroundings to hold its temperature and pressure fixed. Instead, equilibrium is reached when the system’s entropy is at a maximum, given its fixed energy, volume and composition.

Equilibrium: At equilibrium, the system’s entropy has reached the highest value available to it, and there will be no further change unless the system is disturbed from the outside.

In every case, the system is driven towards equilibrium, but the quantity that is extremized depends on what the system can exchange. Both criteria are expressions of the same second law. In closed systems held at constant temperature and pressure, equilibrium corresponds to the minimum Gibbs free energy. In isolated systems, it corresponds to maximum entropy. In open systems, the balance is dynamic and maintained by flows. It does not necessarily sit at the minimum Gibbs free energy, but at a point where the system’s composition remains constant over time.

Extending the concepts of Gibbs free energy and equilibrium to negentropy and the arrow of time involves understanding how systems evolve and maintain order within the universal trend towards increasing entropy.

Negentropy:

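Definition: Negentropy, or negative entropy, measures the degree of order or organization in a system. It is often quantified as the gap between the maximum entropy the system could have and the entropy it actually has.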

Relation to Gibbs Free Energy: A system with high negentropy is one where the Gibbs free energy is being effectively utilized to create and maintain order. In biological systems, for example, the energy from food or sunlight is used to build complex structures, reducing local entropy at the expense of increasing the entropy of the environment.
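One common way to make negentropy quantitative is to measure it as the gap to maximum entropy, J = S_max − S. The sketch below assumes a system with a small number of discrete states, each occupied with probability p_i. Entropy is the Gibbs/Shannon form S = −Σ p ln p, in units of k_B, and S_max = ln N for N equally likely states. The three distributions are illustrative only.

```python
import math


def entropy(probs: list[float]) -> float:
    """Gibbs/Shannon entropy in units of k_B: S = -sum(p ln p). probs must sum to 1."""
    return sum(-p * math.log(p) for p in probs if p > 0)


def negentropy(probs: list[float]) -> float:
    """Negentropy as the gap to maximum (uniform) entropy: ln N - S."""
    gap = math.log(len(probs)) - entropy(probs)
    return max(0.0, gap)  # clamp floating-point round-off; the true gap is never negative


ordered = [1.0, 0.0, 0.0, 0.0]       # certainly in one state: maximum order
partly = [0.7, 0.1, 0.1, 0.1]
uniform = [0.25, 0.25, 0.25, 0.25]   # every state equally likely: maximum disorder

for name, p in [("ordered", ordered), ("partly ordered", partly), ("uniform", uniform)]:
    print(f"{name:15s} S = {entropy(p):.3f}  negentropy = {negentropy(p):.3f}")
```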

Arrow of Time:

Definition: The arrow of time refers to the one-way direction of time from the past to the future, characterized by the increase of entropy in an isolated system.

Relation to Gibbs Free Energy: While Gibbs free energy itself doesn’t dictate the direction of time, it is involved in processes that unfold over time. Spontaneous processes contribute to the forward arrow of time by increasing entropy. Non-spontaneous processes can locally decrease entropy, but only by increasing the entropy elsewhere, in accordance with the second law of thermodynamics.

When considering different types of systems:

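Closed Systems: In closed systems, local order can arise temporarily, for example when a substance crystallizes and releases heat as its Gibbs free energy falls towards its minimum. However, the entropy exported to the surroundings outweighs the local decrease. The overall entropy, including the surroundings, therefore increases over time, contributing to the arrow of time.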

Open Systems: Open systems can maintain a state of negentropy by continuously importing low-entropy energy and exporting high-entropy waste. This steady state supports the arrow of time: the system remains ordered while the universe’s entropy still increases.

Isolated Systems: Isolated systems will inevitably move towards maximum entropy, reinforcing the arrow of time. Negentropy cannot be sustained indefinitely in an isolated system because there is no energy input to maintain order.
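Earth gives a rough picture of how an open system exports entropy. The sketch below uses the simple estimate S = Q/T for the entropy carried by energy Q at temperature T. Two effective temperatures are assumed: about 5800 K for incoming sunlight and about 255 K for Earth's outgoing infrared. The exact expression for radiation carries an extra factor of 4/3, which scales both terms equally and does not change the ratio. At steady state Earth re-emits as much energy as it absorbs, but that energy leaves carrying far more entropy.

```python
def entropy_flow(energy_j: float, temperature_k: float) -> float:
    """Entropy carried by energy Q at temperature T, using the simple Q/T estimate."""
    return energy_j / temperature_k


T_SUN = 5800.0    # approximate effective temperature of incoming sunlight, K
T_EARTH = 255.0   # approximate effective radiating temperature of Earth, K
energy = 1.0      # one joule absorbed and, at steady state, one joule re-emitted

s_in = entropy_flow(energy, T_SUN)
s_out = entropy_flow(energy, T_EARTH)
print(f"entropy in:  {s_in:.6f} J/K per J")
print(f"entropy out: {s_out:.6f} J/K per J")
print(f"net export:  {s_out - s_in:.6f} J/K per J (about {s_out / s_in:.0f}x more out than in)")
```

That surplus of exported entropy is the "budget" that lets weather, ecosystems and human systems build local order without violating the second law.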

In summary, negentropy and the arrow of time are interconnected through the laws of thermodynamics and the concept of Gibbs free energy. Negentropy represents the local decrease in entropy or increase in order, which is made possible by the utilization of Gibbs free energy. The arrow of time is the overall direction in which entropy increases, and it is a fundamental aspect of the universe that even negentropic processes must ultimately adhere to.

XI. Physical Asset Management and Sustainability

Applying first principles and systems thinking to the lifecycle of physical assets and the discipline of physical asset management in relation to Gibbs free energy, equilibrium, negentropy, and the arrow of time involves understanding the fundamental behaviours of these concepts and how they manifest in the management of physical assets.

Lifecycle of Physical Assets:

Concept: The lifecycle of a physical asset encompasses its creation, utilization, maintenance, and eventual disposal or renewal.

Systems Thinking: Each stage of the asset’s lifecycle can be seen as a system that requires energy input and management to maintain its utility and value.

Physical Asset Management:

Concept: Physical asset management is the systematic process of effectively overseeing the lifecycle of physical assets to maximize their value and minimize associated costs.

Systems Thinking: It involves managing the flow of resources and energy throughout the asset’s lifecycle to ensure optimal performance and longevity.

Gibbs Free Energy:

Concept: Gibbs free energy represents the maximum amount of non-expansion work that can be extracted from a system at constant temperature and pressure.

Relation to Asset Management: In asset management, minimizing Gibbs free energy could be analogous to minimizing the energy required to maintain and operate an asset efficiently.

Equilibrium:

Concept: Equilibrium is the state where a system’s properties are stable and unchanging over time.

Relation to Asset Management: Achieving equilibrium in asset management means balancing the inputs and outputs to maintain the asset’s functionality without excessive energy expenditure or resource depletion.

Negentropy:

Concept: Negentropy refers to the creation of order from disorder, a decrease in entropy within a system.

Relation to Asset Management: Asset management aims to create negentropy by organizing and maintaining assets in an ordered state, counteracting the natural tendency towards disorder.

Arrow of Time:

Concept: The arrow of time is the directionality of time from the past to the future, characterized by the increase of entropy in an isolated system.

Relation to Asset Management: In asset management, the arrow of time is reflected in the aging and degradation of assets. Effective management seeks to slow this process, extending the useful life of the assets.

In essence, the discipline of physical asset management is about applying energy and resources in a structured way to maintain and extend the useful life of assets. It involves creating order and reducing entropy within the system of the asset’s lifecycle, while also acknowledging the inevitable increase in entropy over time due to the arrow of time. The goal is to reach a state of equilibrium where the assets are maintained efficiently, with minimal energy waste, reflecting the principles of Gibbs free energy and negentropy in a practical context.

Tying the principles of physical asset management and the lifecycle of physical assets back to the Passive House concept involves understanding how these principles are applied to create buildings that are sustainable, efficient, and have a long useful life.

Lifecycle of Physical Assets:

Passive House Application: The lifecycle of a Passive House is designed with sustainability in mind from the outset. From the choice of materials to construction methods, each phase is planned to minimize energy use and maximize the building’s lifespan.

Physical Asset Management:

Passive House Application: Managing a Passive House involves regular maintenance to ensure that its energy-saving features, such as insulation and air-tightness, continue to function optimally, thereby extending the asset’s life and maintaining its value.

Gibbs Free Energy:

Passive House Application: A Passive House is designed to minimize the need for external energy inputs for heating and cooling, analogous to minimizing Gibbs free energy in a chemical system. It uses the least amount of energy to maintain comfortable living conditions.

Equilibrium:

Passive House Application: A Passive House aims to achieve a state of equilibrium where the internal environment is stable and requires minimal energy input to maintain. This is achieved through design features that capitalize on natural energy sources and minimize energy loss.
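A steady-state heat-loss estimate shows why minimizing energy loss matters for keeping the interior stable. Heat lost through an envelope element is approximately Q = U · A · ΔT, and at steady state the heating system must replace exactly that. The wall area and temperature difference below are hypothetical. U = 1.00 W/(m²·K) stands in for a poorly insulated wall. U = 0.15 W/(m²·K) is the commonly cited Passive House guideline for opaque envelope elements. The sketch ignores ventilation, windows and thermal bridges.

```python
def conductive_heat_loss_w(u_value: float, area_m2: float, delta_t_k: float) -> float:
    """Steady-state transmission heat loss through an envelope element: Q = U * A * dT (watts)."""
    return u_value * area_m2 * delta_t_k


AREA = 100.0     # hypothetical wall area, m^2
DELTA_T = 20.0   # hypothetical indoor-outdoor temperature difference, K

walls = {
    "poorly insulated (U = 1.00)": 1.00,          # illustrative value, W/(m^2 K)
    "Passive House guideline (U = 0.15)": 0.15,   # commonly cited upper bound, W/(m^2 K)
}
for label, u in walls.items():
    print(f"{label}: {conductive_heat_loss_w(u, AREA, DELTA_T):.0f} W must be resupplied")
```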

Negentropy:

Passive House Application: By creating a highly ordered and efficient structure, a Passive House exemplifies negentropy in the built environment. It maintains a low-entropy state by reducing energy waste and maximizing the use of sustainable resources.

Arrow of Time:

Passive House Application: The Passive House design acknowledges the arrow of time through its durability and adaptability. It is built to withstand the test of time, slowing down the degradation process and maintaining functionality over a longer period than conventional buildings.

In essence, the Passive House concept is a practical application of the principles discussed. It is a manifestation of physical asset management that takes into account the laws of thermodynamics to create a living space that is both energy-efficient and sustainable. It seeks to minimize the rate of entropy increase within the building system while providing a comfortable and enduring habitat for its occupants. This aligns with the broader goal of sustainability, which is to manage and utilize resources in a way that maintains the health and stability of our systems over time.

Integrating the concepts of the lifecycle of physical assets, physical asset management, Gibbs free energy, equilibrium, negentropy, and the arrow of time into the pursuit of sustainability involves understanding how these concepts interrelate to create systems that are efficient, resilient, and capable of enduring over time within the constraints of our physical universe.

Lifecycle of Physical Assets:

Sustainability: The sustainable management of physical assets involves considering their entire lifecycle – from design and construction to operation, maintenance, and eventual decommissioning or repurposing. Sustainable practices aim to extend the useful life of assets and reduce the environmental impact at each stage.

Physical Asset Management:

Sustainability: Effective asset management ensures that resources are used optimally, waste is minimized, and the overall energy requirements are reduced. This aligns with sustainability goals by promoting efficiency and reducing the carbon footprint of asset operation and maintenance.

Gibbs Free Energy:

Sustainability: In the context of sustainability, minimizing Gibbs free energy means designing and operating systems that require the least amount of external energy input to function. This is akin to creating processes that are energetically favourable and contribute to a lower environmental impact.

Equilibrium:

Sustainability: Achieving equilibrium in a system means reaching a state where the system can operate effectively without excessive energy input or waste output. Sustainable systems aim for a balance that allows them to function efficiently over time.

Negentropy:

Sustainability: Negentropy in sustainability is about creating and maintaining order – such as efficient energy use, minimal waste, and high levels of organization – in a system that naturally tends towards disorder. Sustainable practices strive to maintain this order by managing resources and energy flows intelligently.

Arrow of Time:

Sustainability: The arrow of time reminds us that all systems will eventually move towards disorder. However, sustainable practices seek to slow this inevitable process by designing systems that are resilient and can adapt to changes over time, thus extending their period of usefulness and efficiency.

When we consider the Passive House concept, it embodies these principles by creating buildings that are designed to be energy-efficient (minimizing Gibbs free energy), maintain a stable internal environment (equilibrium), organize energy flows to maintain low entropy (negentropy), and are built to last for many years with minimal environmental impact (acknowledging the arrow of time). The Passive House standard is a model for sustainability because it applies these thermodynamic principles to create living spaces that require less energy, produce less waste, and provide long-term value.

In summary, the pursuit of sustainability is about applying these interconnected concepts to create systems that are efficient, resilient, and have minimal impact on the environment. It’s about intelligently managing the flow of energy and resources to maintain order and functionality in the face of the natural tendency towards increasing entropy, all while considering the long-term implications of the arrow of time.

XII. System-of-Systems Through the Lens of Thermodynamics: Microstates, Macrostates, Gibbs Free Energy, and Negentropy

By applying first principles thinking and a systems thinking mindset to the concepts of system-of-systems, microstates, and macrostates, we can dissect these concepts into their fundamental components and comprehend their interactions within larger frameworks.

System-of-Systems:

First Principles: A system-of-systems is an assembly of task-specific or dedicated systems that combine their resources and capabilities to form a new, more intricate system that offers greater functionality than the sum of its constituent parts.

Systems Thinking: Viewed from a systems perspective, each individual system within a system-of-systems retains its own operations and objectives. However, when these systems are integrated, they contribute to a shared capability. This integration often gives rise to emergent behaviours that are not found in the individual systems.

Microstates:

First Principles: In the realm of thermodynamics, a microstate is a detailed configuration of a system at a microscopic level, characterized by the precise states of all particles within the system.

Systems Thinking: Microstates symbolize the myriad possible arrangements that can culminate in a specific macrostate. In the context of a system-of-systems, each microstate could be interpreted as the particular state of each individual system at a specific moment.

Macrostates:

First Principles: A macrostate is delineated by the macroscopic properties of a system, such as temperature, pressure, and volume, which emerge from the collective behaviour of particles.

Systems Thinking: Macrostates are the discernible states of a system that materialize from the collective properties of microstates. In a system-of-systems, the macrostate would represent the overall behaviour or performance of the entire system assembly.
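Counting microstates for a toy model makes the microstate/macrostate distinction concrete. The sketch below assumes N = 10 hypothetical two-state units, such as coins or spins. A microstate is the exact up/down pattern, and a macrostate is just "how many are up". Each macrostate has W = C(N, n) microstates, and Boltzmann's relation S = k_B ln W assigns it an entropy.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K (exact SI value)


def microstate_count(n_total: int, n_up: int) -> int:
    """Number of microstates (arrangements) giving the macrostate 'n_up of n_total are up'."""
    return math.comb(n_total, n_up)


def boltzmann_entropy(w: int) -> float:
    """S = k_B ln W."""
    return K_B * math.log(w)


N = 10
for n_up in range(N + 1):
    w = microstate_count(N, n_up)
    print(f"macrostate {n_up:2d} up: W = {w:3d}, S = {boltzmann_entropy(w):.3e} J/K")
```

The all-up and all-down macrostates each have a single microstate and zero entropy. The evenly mixed macrostate (5 up) can be realized in 252 ways, so it is both the most probable and the highest-entropy state.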

When these concepts are integrated, we observe:

Interactions and Dependencies: In a system-of-systems, the interactions between the states of the individual systems (the analogue of microstates) give rise to the overall behaviour (macrostate) of the entire ensemble. The dependencies and relationships among the systems dictate the efficiency and functionality of the system-of-systems.

Emergent Behaviour: Just as macrostates in thermodynamics emerge from the collective behaviour of microstates, emergent behaviours surface in a system-of-systems when the systems collaborate. These behaviours may be unpredictable and not inherent in the individual systems.

Evolution Over Time: Both in thermodynamics and in system-of-systems, there is a progression over time. Microstates continuously change, leading to different macrostates. Similarly, the components of a system-of-systems may evolve, giving rise to new capabilities or behaviours.

Sustainability and Efficiency: The quest for sustainability in a system-of-systems can be viewed as an endeavour to optimize the macrostate of the system to achieve peak efficiency and minimal entropy increase, aligning with the principles of negentropy and the arrow of time.

Applying first principles and systems thinking to these concepts enables us to unravel the complexity and dynamics of system-of-systems and their thermodynamic counterparts. It empowers us to analyse and design systems that are more efficient, sustainable, and adaptable by considering the fundamental interactions and behaviours at both micro and macro levels.

To broaden the concepts of system-of-systems, microstates, and macrostates to encompass Gibbs free energy and negentropy, we must contemplate how these thermodynamic principles apply to intricate, interconnected systems.

Gibbs Free Energy in System-of-Systems:

First Principles: Gibbs free energy (G) is a thermodynamic potential. Its decrease quantifies the maximum reversible work, other than pressure-volume work, that a closed thermodynamic system can perform at constant temperature and pressure.

Systems Thinking: In a system-of-systems, G can be perceived as the potential for the entire system to perform work or undergo change. A system-of-systems with lower Gibbs free energy is more stable and less prone to spontaneous change, whereas one with higher Gibbs free energy has a greater potential for change.

Negentropy in System-of-Systems:

First Principles: Negentropy, or negative entropy, denotes the degree of order or organization in a system, which is the antithesis of entropy, a measure of disorder.

Systems Thinking: In the context of a system-of-systems, negentropy signifies the organization and potential for maintaining order within the system. A system-of-systems with high negentropy is highly organized and efficient, with each subsystem contributing to the overall order and functionality.

Microstates and Macrostates:

First Principles: Microstates are the specific configurations at a microscopic level that lead to a macrostate, which is an observable state characterized by macroscopic properties like temperature and pressure.

Systems Thinking: In a system-of-systems, the state of each subsystem plays a role analogous to a microstate, contributing to the overall macrostate. The collective behaviour of these subsystem states determines the macrostate of the system-of-systems.

Integration of Concepts:

Gibbs Free Energy and Microstates: The Gibbs free energy of a system-of-systems is influenced by the microstates of its subsystems. The more ways the subsystems can arrange themselves while maintaining a low Gibbs free energy, the more stable the overall system-of-systems.

Negentropy and Macrostates: The macrostate of a system-of-systems with high negentropy is one of order and low entropy. This implies that the system-of-systems is efficiently organized and less likely to spontaneously move towards disorder.

Sustainability and Efficiency:

Gibbs Free Energy: Sustainable systems-of-systems strive to minimize Gibbs free energy, which means they are designed to operate efficiently with minimal energy input and waste output.

Negentropy: Sustainable systems-of-systems also aim to maximize negentropy, maintaining a high level of order and organization to resist the natural trend towards disorder.

Applying first principles and systems thinking to these concepts aids us in understanding how to design and manage complex systems in a manner that is sustainable, efficient, and resilient. By considering Gibbs free energy and negentropy, we can construct systems-of-systems that perform their functions effectively while minimizing the inevitable increase in entropy over time. This aligns with the pursuit of sustainability within the constraints of the arrow of time.

Incorporating the concepts of a portfolio of assets within the framework of system-of-systems and physical asset management necessitates understanding how various assets are interconnected and managed to achieve overarching organizational objectives. Here’s how these concepts interrelate:

Portfolio of Assets as a System-of-Systems:

First Principles: A portfolio of assets, such as those within a property or site, can be viewed as a system-of-systems because it comprises various subsystems (individual assets) that interact to achieve a common objective.

Systems Thinking: Each asset in the portfolio has its own lifecycle and requires individual management. However, when these assets are considered collectively, their interdependencies and collective impact on organizational performance must be managed holistically.

Hierarchy of Assets:

First Principles: Within a portfolio, assets can be organized into a hierarchy, where higher-level assets depend on the functionality of lower-level assets.

Systems Thinking: This hierarchical structure facilitates efficient management and maintenance, ensuring that the performance of one asset supports the performance of others, thereby optimizing the system-of-systems.
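An asset hierarchy is naturally a tree in which a higher-level asset delivers its function only if the assets beneath it do. The sketch below is hypothetical throughout: the `Asset` class, its methods and the example site are illustrative and do not come from any asset management standard or tool. It shows how a single low-level failure propagates up the hierarchy, and how the failure can be traced back down.

```python
from dataclasses import dataclass, field


@dataclass
class Asset:
    """A node in a hypothetical asset hierarchy."""
    name: str
    functional: bool = True
    children: list["Asset"] = field(default_factory=list)

    def available(self) -> bool:
        """An asset delivers its function only if it and every asset it depends on work."""
        return self.functional and all(child.available() for child in self.children)

    def failed_components(self) -> list[str]:
        """Names of non-functional assets anywhere in this subtree."""
        failed = [] if self.functional else [self.name]
        for child in self.children:
            failed.extend(child.failed_components())
        return failed


# Hypothetical site: a building depends on its HVAC plant, which depends on a pump and a fan.
pump = Asset("circulation pump")
fan = Asset("supply fan")
hvac = Asset("HVAC plant", children=[pump, fan])
building = Asset("office building", children=[hvac, Asset("building envelope")])

print(building.available())                               # everything works
fan.functional = False                                    # one low-level asset degrades
print(building.available(), building.failed_components())
```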

Physical Asset Management:

First Principles: Physical asset management is the coordinated activity of an organization to realize value from assets by managing them throughout their lifecycle.

Systems Thinking: In managing a system-of-systems, physical asset management ensures that each asset performs its intended function effectively, contributing to the overall performance of the portfolio.

Gibbs Free Energy and Negentropy:

First Principles: Minimizing Gibbs free energy and maximizing negentropy within a system-of-systems leads to stability and order.

Systems Thinking: Effective asset management seeks to minimize the energy (resources) required to maintain the portfolio while maximizing the order (negentropy), ensuring that the system-of-systems operates efficiently and sustainably.

Arrow of Time:

First Principles: The arrow of time dictates that systems naturally progress towards increased entropy.

Systems Thinking: Physical asset management within a system-of-systems aims to slow the progression of entropy by maintaining and optimizing assets, thus extending their useful life and value to the organization.

In conclusion, a portfolio of assets managed by the discipline of physical asset management is a complex system-of-systems that requires careful consideration of the interrelationships and dependencies among assets. By applying principles of Gibbs free energy, negentropy, and the arrow of time, organizations can manage their assets in a way that supports sustainability and efficiency, ultimately contributing to the long-term success of the organization.

XIII. Embracing Thermodynamic Wisdom: A Path Toward Principled Sustainability

As we approach the culmination of this journey through the realms of thermodynamics, systems theory, and sustainability, a profound realization emerges: while the pursuit of true sustainability may be an asymptotic ideal, our understanding of these fundamental principles equips us to navigate the intricate balance between progress and preservation.

By embracing the inescapable constraints imposed by the laws of thermodynamics and the ever-increasing entropy of our finite world, we can strive to optimize our stewardship of resources, design resilient systems, and cultivate a nuanced approach to sustainability that acknowledges both its limitations and its imperative necessity.

By applying first principles thinking, we dismantled the concept of enthalpy into its fundamental building blocks: internal energy, pressure, and volume. This deep dive revealed that enthalpy (H) is not just the internal energy (E) of a system. It also includes the pressure-volume term (PV), the work needed to make room for the system against the pressure of its surroundings, so that H = E + PV. Understanding these components allows us to grasp enthalpy’s key features:

State function: It depends only on the current state, not the path taken.

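Heat exchanged at constant pressure: A system does not ‘hold’ heat. At constant pressure, however, the change in its enthalpy equals the heat it exchanges with the surroundings, accounting for both the internal energy change and the pressure-volume work.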

Changes in enthalpy (ΔH): These reveal heat absorbed or released during processes, crucial for analysing chemical reactions and phase changes.

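Conservation: Enthalpy itself is not a conserved quantity. The first law conserves energy, so it is the internal energy of an isolated system that remains constant. Enthalpy changes are a convenient way to track the heat exchanged in processes at constant pressure.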

Predictive power: Knowing ΔH allows us to predict if a reaction is endothermic (absorbs heat) or exothermic (releases heat), vital in chemistry and engineering.
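The PV term is easy to see in a gas-phase reaction. For ideal gases at constant temperature and pressure, Δ(PV) ≈ Δn_gas·RT, so ΔE (written ΔU below) = ΔH − Δn_gas·RT. The sketch uses methane combustion as an assumed example, with liquid water as a product and the rounded textbook enthalpy of combustion of about −890 kJ/mol. Three moles of gas become one, so the surroundings do work on the system. As a result, less internal energy is released than enthalpy.

```python
R = 8.314e-3  # gas constant, kJ/(mol K)


def internal_energy_change(delta_h_kj: float, delta_n_gas: float, temperature_k: float = 298.15) -> float:
    """dU = dH - d(PV), approximating d(PV) as dn_gas * R * T for ideal gases."""
    return delta_h_kj - delta_n_gas * R * temperature_k


def heat_direction(delta_h_kj: float) -> str:
    """Classify a process by the sign of its enthalpy change."""
    if delta_h_kj < 0:
        return "exothermic (releases heat)"
    if delta_h_kj > 0:
        return "endothermic (absorbs heat)"
    return "thermoneutral"


# CH4(g) + 2 O2(g) -> CO2(g) + 2 H2O(l), approximate standard enthalpy of combustion
DELTA_H = -890.0      # kJ/mol, rounded textbook value
DELTA_N_GAS = 1 - 3   # 1 mol of gas produced from 3 mol of gas consumed

delta_u = internal_energy_change(DELTA_H, DELTA_N_GAS)
print(f"dH = {DELTA_H:.1f} kJ/mol, dU = {delta_u:.1f} kJ/mol: {heat_direction(DELTA_H)}")
```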

In essence, enthalpy empowers us to quantify energy changes in various processes, especially those involving heat exchange with the surroundings. This knowledge is instrumental in predicting system behaviour under different conditions, making it a cornerstone of chemistry, physics, and engineering.

First principles thinking allowed us to explore the essence of entropy (S), revealing it as a measure of disorder or randomness within a system. This randomness is quantified by the number of microscopic arrangements (microstates) that correspond to a macroscopic state, through Boltzmann’s relation S = k_B ln W, where W is the number of microstates. Here are the fundamental principles that govern entropy:

Second Law of Thermodynamics: The second law dictates a natural tendency for entropy, and hence disorder, to increase in isolated systems over time.

State Function: Similar to enthalpy, entropy depends only on the current system state, independent of the path taken to reach it.

Unavailability of Energy: Increased entropy signifies a decrease in usable energy within a system.

Irreversibility: Entropy increases naturally. Reversing this locally requires external work, which in turn raises entropy elsewhere.

Microscopic vs. Macroscopic: Entropy bridges the gap between the microscopic world (number of microstates) and the macroscopic world (observable properties), explaining why some processes happen spontaneously while others don’t.

Understanding these principles equips us to grasp the inherent directionality of time and energy flow in the universe. This knowledge is foundational for various fields, including physics, chemistry, information theory, and even economics.

Entropy is intricately linked to the concept of the arrow of time, the one-way flow of time from past to future. This connection stems from the second law of thermodynamics, which dictates that entropy in an isolated system can only increase, not decrease. Here’s how these concepts intertwine:

Irreversible Processes and the Past: Entropy increases in spontaneous processes, making them irreversible. This irreversibility allows us to differentiate the past (lower entropy) from the future (higher entropy), essentially creating an arrow of time.

From Microscopic to Macroscopic: While microscopic interactions themselves are time-symmetric, the collective behaviour of particles on a larger scale leads to entropy increase, signifying the forward march of time.

Memory and the Universe: Our memory aligns with this concept – we remember the past, a lower entropy state, and not the unpredictable future state of higher entropy. Even the vast expansion of the universe is considered in relation to entropy.
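The Ehrenfest urn model is a classic toy model of how time-symmetric microscopic rules can still produce a one-way macroscopic trend. N particles sit in two boxes, and at each step one particle, chosen at random, hops to the other box. Each individual hop could equally be undone. Yet starting from the low-entropy state with every particle on the left, the entropy ln W of the macrostate rises towards its maximum and then only fluctuates near it. The particle count, step count and random seed below are arbitrary choices.

```python
import math
import random


def ehrenfest(n_particles: int, steps: int, seed: int = 0) -> list[float]:
    """Ehrenfest urn model: each step, one randomly chosen particle hops to the other box.

    Returns the Boltzmann entropy ln W (in units of k_B) of the macrostate
    'n particles in the left box' after every step.
    """
    rng = random.Random(seed)
    left = n_particles  # low-entropy start: every particle in the left box
    entropies = [math.log(math.comb(n_particles, left))]
    for _ in range(steps):
        # The chosen particle is in the left box with probability left / n_particles.
        if rng.random() < left / n_particles:
            left -= 1
        else:
            left += 1
        entropies.append(math.log(math.comb(n_particles, left)))
    return entropies


trace = ehrenfest(n_particles=100, steps=2000)
max_s = math.log(math.comb(100, 50))
print(f"start S = {trace[0]:.2f}, after 2000 hops S = {trace[-1]:.2f}, maximum = {max_s:.2f}")
```

Running the hops backwards is never forbidden. It is simply overwhelmingly unlikely that random hops will return all 100 particles to one box, which is the statistical root of the thermodynamic arrow of time.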

In essence, entropy acts as a thermodynamic arrow of time, mirroring our everyday experience of time’s flow. Enthalpy changes alone do not set this arrow, but they are connected to it through energy transfer and chemical reactions.

Enthalpy and Energy Transfer: Changes in enthalpy (endothermic or exothermic) reveal the direction of heat flow between a system and its surroundings, but do not by themselves establish an arrow of time.

Irreversible Reactions and Entropy: Many chemical reactions proceed in a single direction, increasing the total entropy of the system and its surroundings and so contributing indirectly to the arrow of time.

Equilibrium, Spontaneity, and the Big Picture: While entropy governs the directionality of time, enthalpy works together with entropy and temperature to determine whether a process at constant temperature and pressure is spontaneous. This is expressed through the Gibbs free energy change, ΔG = ΔH − TΔS.
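At constant temperature and pressure, the enthalpy change of a process fixes the entropy change of the surroundings: ΔS_surr = −ΔH/T. The total entropy change is therefore ΔS_total = ΔS_sys − ΔH/T, which equals −ΔG/T. A negative ΔG is simply another way of saying that total entropy rises. The sketch below checks this for ammonia synthesis (N₂ + 3H₂ → 2NH₃), a reaction chosen here purely for illustration. It assumes approximate textbook standard values of ΔH ≈ −92 kJ/mol and ΔS ≈ −198 J/(mol·K) per mole of reaction as written, and treats both as independent of temperature.

```python
# Approximate standard values for N2 + 3 H2 -> 2 NH3, per mole of reaction as written.
# Assumed constant with temperature, which is only a rough approximation.
DELTA_H = -92_000.0  # J/mol (exothermic)
DELTA_S_SYS = -198.0  # J/(mol*K) (fewer gas molecules, so system entropy falls)


def gibbs(delta_h: float, delta_s: float, temperature: float) -> float:
    """Gibbs free energy change, dG = dH - T*dS, in J/mol."""
    return delta_h - temperature * delta_s


def total_entropy_change(delta_h: float, delta_s_sys: float, temperature: float) -> float:
    """dS_sys + dS_surr, where dS_surr = -dH/T because the system's heat enters the surroundings."""
    return delta_s_sys - delta_h / temperature


if __name__ == "__main__":
    for t in (298.15, 600.0):
        dg = gibbs(DELTA_H, DELTA_S_SYS, t)
        ds_total = total_entropy_change(DELTA_H, DELTA_S_SYS, t)
        print(f"T = {t:6.1f} K: dG = {dg / 1000:7.1f} kJ/mol, "
              f"dS_total = {ds_total:6.1f} J/(mol*K), -dG/T = {-dg / t:6.1f}")
```

At room temperature the heat released raises the entropy of the surroundings by more than the system loses, so the reaction is spontaneous. At 600 K the same heat is divided by a larger T, the total entropy change turns negative, and ΔG becomes positive.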

Enthalpy changes, though not a direct measure, are part of the bigger thermodynamic picture that aligns with entropy increase and the forward flow of time. They provide insights into the energy dynamics within processes that, when coupled with entropy, solidify our understanding of the arrow of time.

The type of system (open, closed, or isolated) significantly affects how entropy behaves and how it relates to the arrow of time. While the second law requires that the entropy of an isolated system never decreases, local decreases can occur in open and closed systems:

Open Systems: They exchange both energy and matter, allowing for localized entropy reduction by receiving low-entropy energy from outside or expelling high-entropy waste. However, the total entropy of the system plus its surroundings still increases.

Closed Systems: These systems can exchange energy but not matter. A local decrease in entropy can occur, as when water freezes, but it is accompanied by an equal or greater entropy increase in the surroundings. The total entropy of system and surroundings therefore goes up or, in the idealized reversible limit, stays the same.

The key point is that the total entropy of the universe (isolated system) will always increase or remain constant, even with localized entropy decreases. This universal truth underpins the thermodynamic arrow of time, where entropy points us from the lower-entropy past to the higher-entropy future.
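The freezing-water example can be checked with numbers. This is a minimal sketch for one mole of liquid water freezing in surroundings held at a fixed temperature. It treats the enthalpy of fusion (about 6.01 kJ/mol) and the matching entropy of fusion (ΔH_fus / 273.15 K, about 22.0 J/(mol·K)) as constants, an approximation that ignores the small difference in heat capacity between ice and water. The system loses entropy, and the surroundings gain ΔH_fus / T_surr from the released heat.

```python
H_FUS = 6010.0  # J/mol, enthalpy of fusion of water near 0 degC (approximate)
T_MELT = 273.15  # K
S_FUS = H_FUS / T_MELT  # J/(mol*K), about 22.0


def freezing_entropy_balance(t_surr: float) -> tuple[float, float, float]:
    """Entropy changes (system, surroundings, total) in J/(mol*K) for freezing 1 mol of water.

    The system (water -> ice) loses S_FUS; the released heat H_FUS flows into
    surroundings at temperature t_surr, raising their entropy by H_FUS / t_surr.
    """
    ds_sys = -S_FUS
    ds_surr = H_FUS / t_surr
    return ds_sys, ds_surr, ds_sys + ds_surr


if __name__ == "__main__":
    for t in (263.15, 273.15, 283.15):
        ds_sys, ds_surr, ds_total = freezing_entropy_balance(t)
        print(f"T_surr = {t:.2f} K: system {ds_sys:+.2f}, "
              f"surroundings {ds_surr:+.2f}, total {ds_total:+.2f} J/(mol*K)")
```

At −10 °C the total is about +0.84 J/(mol·K), so freezing proceeds spontaneously even though the water itself becomes more ordered. At exactly 0 °C the total is zero, which is the equilibrium case. At +10 °C the total would be negative, which is why water does not freeze on a mild day.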

Negative entropy changes might seem counterintuitive, but they are possible in open and closed systems due to energy exchange:

Local Entropy Reduction: Open and closed systems can experience localized decreases, like water freezing. This lowers the system’s entropy but increases the entropy of the surroundings, ensuring the total change remains non-negative.

Negentropy: This term describes localized order and is used in information theory as well as in physics and biology. It does not violate the second law, because the local order is paid for by an equal or larger increase in entropy elsewhere. Living things exemplify negentropy by using low-entropy resources (food, sunlight) to maintain a low-entropy state, constantly expelling high-entropy waste.

Understanding how different systems interact with entropy sheds light on the arrow of time and various natural processes, including life itself. Negentropy, the opposite of entropy, measures a system’s order or organization. Erwin Schrödinger introduced the idea as ‘negative entropy’ in his 1944 book What Is Life?, and Léon Brillouin later popularized the shorter term. It is now a key concept in fields such as information theory, biology, and thermodynamics. Here’s how negentropy plays a significant role:

Order and Information: Negentropy signifies a system’s deviation from randomness. In information theory it is often quantified as the distance from a normal (Gaussian) distribution with the same variance, because the normal distribution has the maximum entropy for a given variance. In simpler terms, it reflects the degree of order and organization within a system.

Life and Complexity: Living things exemplify negentropy by constantly creating order. They import low-entropy materials (food, sunlight) and expel high-entropy waste, maintaining a state of low entropy and enabling complex structures.

Energy and Information Transfer: High-quality energy sources like electricity have low entropy (high negentropy), while low-quality heat has high entropy (low negentropy). In information theory, negentropy relates to the amount of information or predictability in a system. Systems with high negentropy are less uncertain and more predictable.
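Both readings of negentropy described above can be made concrete. In the continuous case, negentropy is commonly defined as J(X) = H(X_gauss) − H(X): the differential entropy of a Gaussian with the same variance, minus that of X. Because the Gaussian has the largest entropy for a given variance, J is never negative and is zero only for a Gaussian. For discrete outcomes we use a simple analogue, often called redundancy: log₂ n − H, the gap between the maximum possible Shannon entropy and the actual entropy. Choosing that analogue is our assumption. The sketch relies on closed-form entropies for a few textbook distributions, and the function names are illustrative.

```python
import math


def gaussian_entropy(variance: float) -> float:
    """Differential entropy (nats) of a normal distribution: 0.5 * ln(2*pi*e*variance)."""
    return 0.5 * math.log(2 * math.pi * math.e * variance)


def negentropy(entropy: float, variance: float) -> float:
    """J = H(Gaussian with same variance) - H(X), in nats; zero only for a Gaussian."""
    return gaussian_entropy(variance) - entropy


def shannon_entropy(probs: list[float]) -> float:
    """Shannon entropy in bits, H = sum(p * log2(1/p)), skipping zero-probability outcomes."""
    return sum(p * math.log2(1 / p) for p in probs if p > 0)


def redundancy(probs: list[float]) -> float:
    """Discrete analogue of negentropy: log2(n) - H, the distance from the uniform (maximum-entropy) case."""
    return math.log2(len(probs)) - shannon_entropy(probs)


if __name__ == "__main__":
    # Continuous case, using closed-form entropies and variances
    width = 1.0  # uniform on [0, width]: H = ln(width), variance = width**2 / 12
    b = 1.0  # Laplace with scale b: H = 1 + ln(2b), variance = 2 * b**2
    print("Gaussian:", negentropy(gaussian_entropy(1.0), 1.0))
    print("Uniform: ", negentropy(math.log(width), width**2 / 12))
    print("Laplace: ", negentropy(1 + math.log(2 * b), 2 * b**2))

    # Discrete case: the more predictable the outcome, the higher the redundancy
    for probs in ([0.25, 0.25, 0.25, 0.25], [0.7, 0.1, 0.1, 0.1], [1.0, 0.0, 0.0, 0.0]):
        print(probs, "H =", round(shannon_entropy(probs), 3),
              "bits, redundancy =", round(redundancy(probs), 3))
```

A Gaussian has zero negentropy, a uniform distribution about 0.18 nats, and a Laplace distribution about 0.07 nats. In the discrete case, a certain outcome reaches the maximum redundancy of log₂ 4 = 2 bits.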

There’s also a connection between negentropy and Gibbs free energy, which sets the maximum non-expansion work a system can deliver at constant temperature and pressure. A supply of free energy is what allows order to be built and maintained. Negentropy is fundamentally linked to life itself. Living systems locally counter the overall trend of increasing entropy in the universe by creating order internally through:

Order from Disorder: Life takes low-entropy inputs (food, sunlight) and converts them into high-entropy outputs (waste), all while maintaining its own internal order and complexity.

Energy Utilization: Living things capture and utilize environmental energy to build and maintain complex structures. This energy conversion is a prime example of negentropy at work.

Thermodynamic Advantage: Living systems aren’t isolated; they exchange matter and energy with the environment. This exchange allows them to maintain or even decrease their internal entropy, creating a thermodynamic advantage.

Evolution and Information Storage: From an evolutionary viewpoint, negentropy is a driving force. Organisms adept at using energy to create order are more likely to survive and reproduce. Additionally, the maintenance and replication of DNA, a highly ordered structure that stores genetic information, is another example of a negentropy-driven process.

In essence, negentropy underpins life’s ability to maintain order, function, and complexity in an inherently disordered universe. It’s a cornerstone concept for understanding the physical principles that govern the existence and sustainability of life.

Human creations, from machines and buildings to AI, all embody the concept of negentropy. They represent our ability to organize energy and resources into structured forms, locally counteracting the natural tendency towards disorder.

Machines, Buildings, and Technology: These advancements demonstrate the human knack for creating order. Machines convert energy efficiently, buildings provide organized spaces, and information technology strives to process information while dissipating less energy as waste heat.

Artificial Intelligence: AI systems structure information to reduce uncertainty and enhance predictability, another facet of negentropy.

Passive House Example: This energy-efficient building standard exemplifies human efforts to minimize the entropy generated while a building is in use. However, the construction process itself and the energy the building still consumes contribute to entropy increase elsewhere.

Even with these creations, the second law of thermodynamics holds. While we create localized order, the overall entropy of the universe increases. The key message is that localized reductions in entropy come with a bigger entropy increase somewhere else. Human creations minimize this increase through efficiency, but they cannot defy the fundamental principle of increasing total entropy in the universe.
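Information processing offers a sharp example of this bookkeeping. Landauer's principle states that erasing one bit of information in a device at temperature T must dissipate at least k_B T ln 2 of heat into the surroundings, raising their entropy by at least k_B ln 2. The short Python sketch below evaluates that bound. The 300 K temperature and the 1-gigabyte example are assumptions chosen for illustration. Real hardware dissipates far more than this theoretical floor.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K


def landauer_limit(temperature: float, bits: float = 1.0) -> float:
    """Minimum heat (J) dissipated when erasing `bits` bits at `temperature` kelvin: bits * k_B * T * ln 2."""
    return bits * K_B * temperature * math.log(2)


if __name__ == "__main__":
    per_bit = landauer_limit(300.0)
    gigabyte = landauer_limit(300.0, bits=8e9)  # assumed example: erasing 1 GB (8e9 bits)
    print(f"One bit at 300 K: {per_bit:.3e} J")
    print(f"One gigabyte at 300 K: {gigabyte:.3e} J")
```

The bound is tiny but not zero. Even a perfectly efficient computer that creates order in its memory must export entropy as heat, as the paragraph above describes.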

Sustainability is inherently linked to managing entropy. While the second law of thermodynamics dictates an inevitable increase in entropy, we can strive to minimize the rate of this increase and extend the lifespan of our systems.

Sustainability Strategies: Efforts like increasing efficiency, utilizing renewable resources, and recycling materials all contribute to minimizing waste and slowing entropy increase. Technological innovation plays a crucial role in developing processes that minimize energy consumption and maximize waste reuse.

Temporal Horizon: Sustainability acknowledges that complete entropy prevention is impossible. The goal is to manage entropy strategically to extend the viability of ecological and human-made systems for as long as possible.

Growth and Equilibrium: Maintaining any ordered system away from thermodynamic equilibrium, whether it is growing, dynamically stable, or apparently static, requires a continuous inflow of energy and resources. This is because some energy is inevitably degraded to waste heat, so a constant supply is needed to compensate for this loss.

The World System: Applied globally, this principle highlights the importance of the sun’s energy and the Earth’s resources for sustaining our planet’s ecosystem, which includes human societies. Sustainable practices aim to manage these resource flows effectively for the long-term health and stability of our world system.
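The world-system point can be roughly quantified. Earth absorbs sunlight emitted by a surface at about 5,772 K and re-radiates the same power to space at an effective temperature of about 255 K. Because the entropy carried by a heat flow scales as Q/T, the outgoing infrared carries far more entropy than the incoming sunlight. That export is what pays for the local order of ecosystems and societies. The sketch below assumes a solar constant of about 1,361 W/m², an albedo of about 0.3, and a mean radius of 6,371 km, and it uses the simple Q/T estimate. This is an order-of-magnitude calculation. A careful treatment of radiation entropy adds a factor of 4/3 and corrections for the dilution of sunlight, but these do not change the conclusion.

```python
import math

SOLAR_CONSTANT = 1361.0  # W/m^2 at top of atmosphere (approximate)
ALBEDO = 0.3  # fraction of sunlight reflected (approximate)
EARTH_RADIUS = 6.371e6  # m, mean radius
T_SUN = 5772.0  # K, effective temperature of the solar surface
T_EARTH = 255.0  # K, effective radiating temperature of Earth


def earth_entropy_budget() -> tuple[float, float, float]:
    """Rough entropy flows (W/K) using the simple Q/T estimate.

    Returns (entropy in with sunlight, entropy out as infrared, net export).
    In steady state the absorbed and emitted powers are equal.
    """
    absorbed_power = SOLAR_CONSTANT * (1 - ALBEDO) * math.pi * EARTH_RADIUS**2  # W
    entropy_in = absorbed_power / T_SUN
    entropy_out = absorbed_power / T_EARTH
    return entropy_in, entropy_out, entropy_out - entropy_in


if __name__ == "__main__":
    s_in, s_out, s_net = earth_entropy_budget()
    print(f"Entropy in:  {s_in:.2e} W/K")
    print(f"Entropy out: {s_out:.2e} W/K")
    print(f"Net export:  {s_net:.2e} W/K (ratio out/in = {s_out / s_in:.1f})")
```

The outgoing stream carries roughly 23 times the entropy of the incoming one, so the planet as a whole exports entropy on the order of 10^14 W/K. This continuous export is the thermodynamic room within which life, growth, and human infrastructure maintain their local order.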

In essence, understanding entropy and its implications allows us to develop strategies for responsible resource management, ultimately promoting a more sustainable future. Revisiting the laws of thermodynamics through the lens of entropy, we gain a deeper appreciation for how energy and disorder behave within physical systems. Here’s a recap:

Zeroth Law: This law establishes the foundation for temperature measurement and thermal equilibrium.

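First Law: Energy is conserved, so the change in a system’s internal energy equals the heat added minus the work done by the system (ΔU = Q − W). Enthalpy, H = U + pV, builds on this principle. Its change equals the heat exchanged in a process at constant pressure.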

Second Law: Introducing the arrow of time, this law dictates the universe’s natural tendency towards increasing entropy (disorder). However, localized order (negentropy) can occur with energy input.

Third Law: The entropy of a perfect crystal approaches zero as the temperature approaches absolute zero, which sets a reference point for absolute entropies. A related consequence is that absolute zero cannot be reached in a finite number of steps.

Understanding these laws is crucial for grasping sustainability principles and the challenges of minimizing entropy increase. Furthermore, the concept of Gibbs free energy emerges from these fundamental laws:

Connecting the Laws: Gibbs free energy, G = H − TS, combines the principles of energy conservation (First Law) and entropy change (Second Law) to predict the spontaneity of processes at constant temperature and pressure.

Spontaneity and Work: A negative ΔG signifies a spontaneous process where the system releases usable energy. Positive ΔG indicates a non-spontaneous process requiring external energy input. At zero ΔG, the system reaches equilibrium with no net change in free energy.
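The sign rules above follow from ΔG = ΔH − TΔS at constant temperature and pressure. If ΔH and ΔS are treated as roughly constant over the temperature range of interest (an approximation), their signs alone show whether a process is spontaneous at all temperatures, at none, or only on one side of a crossover temperature T* = ΔH/ΔS where ΔG = 0. The function below is a hypothetical helper written for this post, not part of any library, and the example values are approximate.

```python
def spontaneity(delta_h: float, delta_s: float) -> str:
    """Classify a process by the signs of dH (J/mol) and dS (J/(mol*K)).

    Assumes both are temperature independent and uses dG(T) = dH - T*dS for T > 0.
    The crossover temperature T* = dH/dS is where dG = 0.
    """
    if delta_h <= 0 and delta_s >= 0:
        if delta_h == 0 and delta_s == 0:
            return "at equilibrium at all temperatures"
        return "spontaneous at all temperatures"  # dG < 0 for every T > 0
    if delta_h >= 0 and delta_s <= 0:
        return "non-spontaneous at all temperatures"  # dG > 0 for every T > 0
    t_star = delta_h / delta_s  # dH and dS are nonzero with the same sign here
    if delta_h < 0:
        return f"spontaneous below {t_star:.1f} K"  # enthalpy-driven
    return f"spontaneous above {t_star:.1f} K"  # entropy-driven


if __name__ == "__main__":
    # Approximate, illustrative values in J/mol and J/(mol*K)
    examples = {
        "ice melting (dH = +6010, dS = +22.0)": (6010.0, 22.0),
        "ammonia synthesis (dH = -92000, dS = -198)": (-92000.0, -198.0),
        "hypothetical dH < 0, dS > 0": (-50000.0, 100.0),
        "hypothetical dH > 0, dS < 0": (50000.0, -100.0),
    }
    for name, (dh, ds) in examples.items():
        print(f"{name}: {spontaneity(dh, ds)}")
```

Ice melting comes out as spontaneous only above about 273 K. That is the familiar melting point, recovered here from nothing more than the signs and ratio of ΔH and ΔS.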

In essence, Gibbs free energy, arising from the interplay of the laws of thermodynamics, predicts the natural course of chemical reactions and a system’s capacity to do work. This understanding is fundamental in fields like chemistry, physics, and engineering, where controlling and predicting system behaviour is essential.

Understanding how Gibbs Free Energy interacts with different systems (closed, open, isolated) is crucial for exploring negentropy and the arrow of time.

Equilibrium and System Types: A closed system held at constant temperature and pressure reaches equilibrium at minimum Gibbs free energy, whereas an isolated system reaches equilibrium at maximum entropy. Open systems can instead settle into a dynamic steady state through a constant flow of matter and energy, maintaining a stable composition rather than a minimum free energy state.

Negentropy and Order: Negentropy signifies a system’s order. High negentropy reflects the efficient use of Gibbs free energy to create and sustain order, as seen in biological systems that use energy to build complex structures.

The Arrow of Time: While not directly dictating time’s direction, Gibbs free energy is involved in time-dependent processes. Spontaneous processes (negative ΔG) contribute to the forward march of time by increasing the total entropy of system and surroundings. Even processes that decrease local entropy (negentropic processes) ultimately increase entropy elsewhere, adhering to the second law.

Negentropy and Different Systems: Closed systems can achieve local, temporary negentropy through energy exchange, but the entropy of system plus surroundings still increases with time. Open systems maintain a dynamic negentropy by importing low-entropy energy and matter and exporting high-entropy waste. Isolated systems cannot sustain negentropy indefinitely because they receive no external energy input.
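The contrast between isolated and open systems described above can be sketched with two blocks of material exchanging heat. In the isolated case, the hot and cold blocks drift to a common temperature and their total entropy rises until the gradient, a simple form of order, is gone. In the open case, a heater supplies energy to the hot block and the cold block sheds heat to an external reservoir. The gradient then persists, but only while entropy is continuously exported. All parameter values (heat capacities, conductances, heater power, temperatures, time step) are illustrative assumptions, and the model uses a simple explicit-Euler time step.

```python
import math


def simulate(open_system: bool, steps: int = 5000, dt: float = 1.0) -> tuple[float, float, float]:
    """Two blocks (hot, cold) coupled by a thermal conductance, integrated with explicit Euler steps.

    Returns (final temperature gap in K, entropy change of the blocks in J/K,
    entropy exported to the outside reservoir in J/K). All parameters are illustrative.
    """
    heat_capacity = 1000.0  # J/K for each block
    conductance = 10.0  # W/K between the blocks
    t_hot, t_cold = 330.0, 290.0  # K, initial temperatures
    heater_power = 200.0  # W into the hot block, treated as a pure energy input (open case only)
    outer_conductance = 10.0  # W/K from the cold block to the reservoir (open case only)
    t_reservoir = 280.0  # K

    s_blocks = 0.0
    s_exported = 0.0
    for _ in range(steps):
        q_between = conductance * (t_hot - t_cold) * dt  # J from hot to cold block
        q_in = heater_power * dt if open_system else 0.0
        q_out = outer_conductance * (t_cold - t_reservoir) * dt if open_system else 0.0
        new_hot = t_hot + (q_in - q_between) / heat_capacity
        new_cold = t_cold + (q_between - q_out) / heat_capacity
        # Entropy change of each block over the step: C * ln(T_new / T_old)
        s_blocks += heat_capacity * (math.log(new_hot / t_hot) + math.log(new_cold / t_cold))
        s_exported += q_out / t_reservoir
        t_hot, t_cold = new_hot, new_cold
    return t_hot - t_cold, s_blocks, s_exported


if __name__ == "__main__":
    for label, is_open in (("isolated", False), ("open", True)):
        gap, s_blocks, s_exported = simulate(open_system=is_open)
        print(f"{label:>8}: final gap {gap:5.2f} K, "
              f"block entropy change {s_blocks:+.2f} J/K, exported {s_exported:.1f} J/K")
```

In the isolated run the 40 K gradient vanishes and the blocks’ entropy rises by about 4.2 J/K. In the open run a 20 K gradient is held indefinitely, but only because the reservoir keeps absorbing entropy, and that quantity keeps growing for as long as the order is maintained.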

In essence, negentropy and the arrow of time are intertwined through thermodynamics and Gibbs free energy. Negentropy represents localized order creation using Gibbs free energy, but even these processes cannot defy the universe’s inherent tendency towards increasing entropy, the arrow of time.

Physical asset management and the lifecycle of physical assets can be understood through the lens of thermodynamics. Concepts like Gibbs free energy (minimizing energy use), equilibrium (efficient operation), negentropy (order and reduced entropy), and the arrow of time (aging and degradation) are all relevant to maximizing an asset’s lifespan and value.

Physical Asset Management and Lifecycle: Understanding the lifecycle stages of physical assets and applying asset management principles are crucial. This involves optimizing energy use and resource allocation throughout an asset’s lifespan.

Connecting to Thermodynamics: Concepts like Gibbs free energy (minimizing energy input), equilibrium (efficient operation), and negentropy (order creation) all play a role in effective asset management.

Arrow of Time: While entropy inevitably increases, asset management strategies can slow down degradation and extend an asset’s useful life.

The Passive House as a Model: The Passive House concept exemplifies these principles in action. It prioritizes minimal energy use (Gibbs free energy), a stable indoor environment (equilibrium), and a low-entropy state through efficient design and resource utilization (negentropy). Furthermore, its durable construction acknowledges the arrow of time.

Sustainability in Focus: Sustainable practices echo these thermodynamic principles. The goal is to design and operate systems that are efficient, minimize waste, and endure over time. This strategy helps us manage resources wisely and reduce our environmental impact.
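The Passive House link between efficient design and slower entropy production can be put into numbers. Heat leaking through a building envelope at a steady rate Q = U·A·ΔT flows from the warm interior to the cold exterior and generates entropy at the rate Q(1/T_out − 1/T_in). The sketch below compares an envelope with a U-value of 0.15 W/(m²·K), in line with commonly cited Passive House guidance for opaque walls and roofs, against an assumed poorly insulated envelope of 1.0 W/(m²·K). The 300 m² envelope area and the 20 °C indoor and 0 °C outdoor temperatures are also assumptions. The model ignores windows, ventilation, and solar gains.

```python
def envelope_heat_loss(u_value: float, area: float, t_in: float, t_out: float) -> float:
    """Steady conductive heat loss Q = U * A * (T_in - T_out), in W."""
    return u_value * area * (t_in - t_out)


def entropy_generation_rate(heat_flow: float, t_in: float, t_out: float) -> float:
    """Entropy generated (W/K) when heat_flow watts pass from t_in to t_out kelvin."""
    return heat_flow * (1.0 / t_out - 1.0 / t_in)


if __name__ == "__main__":
    AREA = 300.0  # m^2 of envelope (assumed)
    T_IN, T_OUT = 293.15, 273.15  # K: 20 degC inside, 0 degC outside (assumed)
    for label, u in (("Passive House-level envelope", 0.15), ("poorly insulated envelope", 1.0)):
        q = envelope_heat_loss(u, AREA, T_IN, T_OUT)
        s_gen = entropy_generation_rate(q, T_IN, T_OUT)
        print(f"{label}: heat loss {q:.0f} W, entropy generation {s_gen:.3f} W/K")
```

Cutting the U-value by a factor of about 6.7 cuts both the heating power that must be supplied and the rate of entropy generation by the same factor. The Passive House does not stop entropy production; it slows it.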

In essence, understanding these interconnected concepts empowers us to build a more sustainable future. By mimicking the principles exemplified by the Passive House model, we can create systems that are both functional and environmentally responsible, minimizing our footprint on the planet.